ICLR Workshop Challenge #2: Radiant Earth Computer Vision for Crop Detection from Satellite Imagery

Meet the winners of ICLR Workshop Challenge #2: Radiant Earth

Agriculture is the driving engine for economic growth in many countries, particularly in the global development community. Therefore, accurate and reliable agricultural data around the world is critical to global resilience and food security. These data are also essential to monitor the progress toward several UN Sustainable Development Goals, including ending poverty, zero hunger, economic growth and more.

Earth observations (EO) provide invaluable data at different spatial and temporal scales and at consistent frequencies. These data can be used to build models for agricultural monitoring, increasing farmers' productivity and enhancing the impact of intervention mechanisms.

In contrast with surveys, agricultural maps based on satellite data can give stakeholders more accurate insights. Traditional data collection only provides aggregated information about regions as a whole, with statistical uncertainty due to regional limitations, whereas Earth observations can provide data at scale with high spatial granularity.

The objective of this competition is to create a machine learning model that classifies fields by crop type from images collected by the Sentinel-2 satellite during the growing season. The fields in the training set are located across western Kenya, and the ground reference data (the crop type of each field) was collected by the PlantVillage team.

The dataset contains more than 4,000 field images. The data you will have access to includes 12 bands of observations from the Sentinel-2 L2A product (covering the ultra-blue, blue, green and red bands; visible and near-infrared (VNIR); and shortwave infrared (SWIR) spectra), as well as a cloud probability layer. All bands are resampled to a common 10 m × 10 m spatial resolution grid.
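To make the band layout concrete, here is a minimal sketch of per-field feature extraction for a single acquisition date. It assumes the 12 bands and the cloud probability layer have already been read into one NumPy array of shape (13, H, W) in the order listed in `BANDS`, with cloud probability on a 0 to 100 scale, and that a boolean mask marks the field's pixels. The band order, the `CLP` name, the helper name and the 50% cloud threshold are illustrative assumptions, not the dataset's documented format. NDVI uses the standard Sentinel-2 red (B04) and near-infrared (B08) bands.

```python
import numpy as np

# Assumed layer order for one acquisition date (hypothetical; check the dataset docs).
BANDS = ["B01", "B02", "B03", "B04", "B05", "B06", "B07",
         "B08", "B8A", "B09", "B11", "B12", "CLP"]


def field_features(stack, field_mask, cloud_threshold=50.0):
    """Cloud-masked per-field band means plus NDVI for one date.

    stack: float array of shape (13, H, W): 12 reflectance bands followed
           by a cloud probability layer (0-100), all on the 10 m grid.
    field_mask: boolean array of shape (H, W), True inside the field.
    """
    cloud = stack[BANDS.index("CLP")]
    clear = field_mask & (cloud < cloud_threshold)
    if not clear.any():
        # Every field pixel is cloudy on this date: return NaNs so the caller can skip it.
        return {name: float("nan") for name in BANDS[:12] + ["NDVI"]}
    # Mean reflectance of each band over the clear pixels of the field.
    feats = {name: float(stack[k][clear].mean()) for k, name in enumerate(BANDS[:12])}
    red = stack[BANDS.index("B04")][clear]
    nir = stack[BANDS.index("B08")][clear]
    # NDVI = (NIR - Red) / (NIR + Red); the small epsilon avoids division by zero.
    feats["NDVI"] = float(np.mean((nir - red) / (nir + red + 1e-9)))
    return feats
```

Computing these features for every date in the growing season and concatenating them gives one fixed-length vector per field, which is one simple way to feed the imagery to a tabular classifier.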

Western Kenya, where the data was collected, is dominated by smallholder farms. This is common across Africa and makes building a crop type classifier from Sentinel-2 data challenging. The class imbalance in the dataset may also need to be taken into account to build a model that performs evenly across all classes.
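One common way to account for class imbalance is to weight each training example by the inverse frequency of its crop type. The sketch below uses the same formula as scikit-learn's `"balanced"` class-weight heuristic, `n_samples / (n_classes * count_c)`; the helper name is hypothetical and the labels are illustrative, since the per-crop counts are not given here. Because this challenge is scored on predicted probabilities, reweighting also shifts those probabilities away from the true class frequencies, so it is worth validating against an unweighted baseline.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Map each class to n_samples / (n_classes * count_c).

    Rare crop types receive weights above 1 and common ones below 1, so every
    class contributes the same total weight to the training loss.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Illustrative labels only: crop 1 appears three times, crop 2 once.
labels = [1, 1, 1, 2]
weights = balanced_class_weights(labels)
sample_weights = [weights[y] for y in labels]
```

The resulting `sample_weights` can be passed to most training APIs that accept per-example weights.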

This competition is part of the Computer Vision for Agriculture (CV4A) Workshop at the 2020 ICLR conference and is designed and organized by Radiant Earth Foundation with support from PlantVillage in providing the ground reference data. Competition prizes are sponsored by Microsoft AI for Earth and Descartes Labs.

About the Computer Vision for Agriculture (CV4A) Workshop and ICLR (cv4gc.org):

Artificial intelligence has spread rapidly through agriculture over the last few years. From automatic crop monitoring via drones, smart agricultural equipment, food security and camera-powered apps assisting farmers, to satellite imagery-based global crop disease prediction and tracking, computer vision has become a ubiquitous tool. The Computer Vision for Agriculture (CV4A) workshop aims to expose the fascinating progress and unsolved problems of computational agriculture to the AI research community. It is jointly organized by AI and computational agriculture researchers and has the support of CGIAR. It will be a full-day event and will feature invited speakers, poster and spotlight presentations, a panel discussion and (tentatively) a mentoring/networking dinner.

About The International Conference on Learning Representations (ICLR) (iclr.cc):

ICLR is the premier gathering of professionals dedicated to the advancement of the branch of artificial intelligence called representation learning, commonly referred to as deep learning.

ICLR is globally renowned for presenting and publishing cutting-edge research on all aspects of deep learning used in the fields of artificial intelligence, statistics and data science, as well as important application areas such as machine vision, computational biology, speech recognition, text understanding, gaming, and robotics.

Participants at ICLR span a wide range of backgrounds, from academic and industrial researchers, to entrepreneurs and engineers, to graduate students and postdocs.

About Radiant Earth (radiant.earth):

Founded in 2016, Radiant Earth Foundation is a nonprofit organization focused on empowering organizations and individuals globally with open Artificial Intelligence (AI) and Earth observations (EO) data, standards and tools to address the world’s most critical international development challenges. With broad experience as a neutral entity working with commercial, academic, governmental and non-governmental partners to expand EO data and information used in the global development sector, Radiant Earth Foundation recognizes the opportunity that exists today to advance new applications and products through AI and machine learning (ML).

To fill this need, Radiant Earth has established Radiant MLHub as an open ML commons for EO. Radiant MLHub is an open digital data repository that allows anyone to discover and access high-quality Earth observation (EO) training datasets. In addition to discovering others’ data, individuals and organizations can use Radiant MLHub to register or share their own training data, thereby maximizing its reach and utility. Furthermore, Radiant MLHub maps all of the training data that it hosts so stakeholders can easily pinpoint geographical areas from which more data is needed.

About PlantVillage (plantvillage.psu.edu):

PlantVillage is a research and development unit of Penn State University that empowers smallholder farmers and seeks to lift them out of poverty by using affordable technology and democratizing access to knowledge that can help them grow more food.

The training dataset for this competition is made available under the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0) license. Therefore, by signing up on Radiant MLHub and accessing the data you agree to the terms of this license.

Teams and collaboration

You may participate in this competition as an individual or in a team of up to four people. When creating a team, the team must have a total submission count less than or equal to the maximum allowable submissions as of the formation date. A team will be allowed the maximum number of submissions for the competition, minus the highest number of submissions among team members at team formation. Prizes are transferred only to the individual players or to the team leader.
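The submission arithmetic for a new team can be expressed as a small check. This is a hypothetical helper, not a Zindi API: `competition_max` is the maximum number of submissions for the competition, `allowed_at_formation` is the maximum allowable as of the team's formation date, and `member_counts` lists each member's submissions before joining. The numbers in the usage line are illustrative only.

```python
def team_submission_allowance(competition_max, allowed_at_formation, member_counts):
    """Return how many submissions a newly formed team may still make.

    Raises ValueError if the team breaks the size limit or the combined
    submission count exceeds what is allowed on the formation date.
    """
    if not 1 <= len(member_counts) <= 4:
        raise ValueError("a team must have between one and four members")
    if sum(member_counts) > allowed_at_formation:
        raise ValueError("combined submissions exceed the allowance at formation")
    # The team keeps the competition maximum minus its most active member's count.
    return competition_max - max(member_counts)


remaining = team_submission_allowance(50, 30, [10, 12])
```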

Multiple accounts per user are not permitted, and neither is collaboration or membership across multiple teams. Individuals and their submissions originating from multiple accounts will be disqualified.

Code must not be shared privately outside of a team. Any code that is shared must be made available to all competition participants through the platform (i.e., on the discussion boards).

Datasets and packages

The solution must use publicly-available, open-source packages only. Your models should not use any of the metadata provided.

You may use only the datasets provided for this competition.

The data used in this competition is the sole property of Zindi and the competition host. You may not transmit, duplicate, publish, redistribute or otherwise provide or make available any competition data to any party not participating in the Competition (this includes uploading the data to any public site such as Kaggle or GitHub). You may upload, store and work with the data on any cloud platform such as Google Colab, AWS or similar, as long as 1) the data remains private and 2) doing so does not contravene Zindi’s rules of use.

You must notify Zindi immediately upon learning of any unauthorised transmission of or unauthorised access to the competition data, and work with Zindi to rectify any unauthorised transmission or access.

Your solution must not infringe the rights of any third party and you must be legally entitled to assign ownership of all rights of copyright in and to the winning solution code to Zindi.

Submissions and winning

You may make a maximum of 10 submissions per day. Your highest-scoring solution on the private leaderboard at the end of the competition will be the one by which you are judged.

As the challenge has now closed, the maximum number of submissions per day is 30.

Zindi maintains a public leaderboard and a private leaderboard for each competition. The Public Leaderboard includes approximately 50% of the test dataset. While the competition is open, the Public Leaderboard will rank the submitted solutions by the score they achieve on the evaluation metric. Upon close of the competition, the Private Leaderboard, which covers 100% of the test dataset, will be made public and will constitute the final ranking for the competition. You also agree that your solution will be shared publicly by the organizers on GitHub as a public good to the sector.

If you are in the top 20 at the time the leaderboard closes, we will email you to request your code. On receipt of the email, you will have 48 hours to respond and submit your code following the submission guidelines detailed below. Failure to respond will result in disqualification.

If your solution places 1st, 2nd, or 3rd on the final leaderboard, you will be required to submit your winning solution code to us for verification, and you thereby agree to assign all worldwide rights of copyright in and to such winning solution to Zindi.

If two solutions earn identical scores on the leaderboard, the tiebreaker will be the date and time at which the submission was made (the earlier solution will win).
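A leaderboard ordering that applies this tiebreaker can be sketched with a two-part sort key. The sketch assumes that lower scores rank higher, since the evaluation metric for this challenge is cross entropy; the tuple layout `(name, score, submitted_at)` and the team names are illustrative assumptions.

```python
from datetime import datetime


def rank_submissions(submissions):
    """Sort (name, score, submitted_at) tuples best-first.

    Lower cross entropy ranks higher; identical scores fall back to the
    earlier submission time.
    """
    return sorted(submissions, key=lambda s: (s[1], s[2]))


ranked = rank_submissions([
    ("team_a", 0.50, datetime(2020, 3, 20, 12, 0)),
    ("team_b", 0.50, datetime(2020, 3, 18, 9, 30)),
    ("team_c", 0.45, datetime(2020, 3, 28, 23, 0)),
])
```

Here `team_c` ranks first on score, and `team_b` beats `team_a` on the tie because it submitted earlier.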

The winners will be paid via bank transfer, PayPal, or other international money transfer platform. International transfer fees will be deducted from the total prize amount, unless the prize money is under $500, in which case the international transfer fees will be covered by Zindi. In all cases, the winners are responsible for any other fees applied by their own bank or other institution for receiving the prize money. All taxes imposed on prizes are the sole responsibility of the winners.
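The fee rule amounts to a single threshold check. In this hypothetical helper, `international_fee_usd` stands in for whatever the transfer provider charges; fees charged by the winner's own bank and any taxes are outside its scope. Under this reading, a prize of exactly $500 is not "under $500", so its transfer fees are deducted.

```python
def net_prize(prize_usd, international_fee_usd):
    """Prize received after international transfer fees under the rule above.

    Zindi covers the fees when the prize is under $500; otherwise they are
    deducted from the prize amount.
    """
    if prize_usd < 500:
        return prize_usd
    return prize_usd - international_fee_usd
```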

You acknowledge and agree that Zindi may, without any obligation to do so, remove or disqualify an individual, team, or account if Zindi believes that such individual, team, or account is in violation of these rules. Entry into this competition constitutes your acceptance of these official competition rules.

Please refer to the FAQs and Terms of Use for additional rules that may apply to this competition. We reserve the right to update these rules at any time.

Reproducibility

- If your submitted code does not reproduce your score on the leaderboard, we reserve the right to adjust your rank to the score generated by the code you submitted.

- If your code does not run, you will be dropped from the top 10. Please make sure your code runs before submitting your solution.

- Always set the seed. Rerunning your model should always place you at the same position on the leaderboard. When running your solution, if randomness shifts you down the leaderboard we reserve the right to adjust your rank to the closest score that your submission reproduces.

- We expect full documentation. This includes:

  - All data used

  - Output data and where they are stored

  - Explanation of features used

- Your solution must include the original data provided by Zindi and validated external data (no processed data)

- All editing of data must be done in a notebook (i.e. not manually in Excel)
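A minimal seeding helper for a Python and NumPy pipeline might look like the sketch below; it assumes NumPy is used and the default seed is arbitrary. Deep learning frameworks keep their own generators (for example, PyTorch's `torch.manual_seed`), and scikit-learn estimators accept a `random_state` argument, so those need to be seeded as well.

```python
import os
import random

import numpy as np


def set_seed(seed=42):
    """Seed Python's and NumPy's global random number generators."""
    random.seed(seed)
    np.random.seed(seed)
    # Affects only subprocesses started after this call; the current
    # interpreter's hash randomization is fixed at startup.
    os.environ["PYTHONHASHSEED"] = str(seed)


set_seed(2020)
```

Calling `set_seed` once at the top of the notebook, before any data splitting or model initialization, makes reruns draw the same random numbers.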

Data standards:

- Your submitted code must run on the original train, test, and other datasets provided.

- If external data is allowed, it must not exceed 1 GB. External data must be freely and publicly available, including pre-trained models with standard libraries. If external data is allowed, any data used should be shared on the discussion forum.

- Packages:

  - You must use the most recent versions of packages. Custom packages in your submission notebook will not be accepted.

  - You may only use tools available to everyone, i.e., no paid services or free trials that require a credit card.

Consequences of breaking any rules of the competition or submission guidelines:

- First offence: No prizes or points for 6 months. If you are caught cheating, all individuals involved in cheating will be disqualified from the challenge(s) you were caught in and you will be disqualified from winning any competitions or Zindi points for the next six months.

- Second offence: Banned from the platform. If you are caught for a second time your Zindi account will be disabled and you will be disqualified from winning any competitions or Zindi points using any other account.

Monitoring of submissions

- We will review the top 20 solutions of every competition when the competition ends.

- We reserve the right to request code from any user at any time during a challenge. You will have 24 hours to submit your code following the rules for code review (see above).

- If you do not submit your code within 24 hours you will be disqualified from winning any competitions or Zindi points for the next six months. If you fall under suspicion again and your code is requested and you fail to submit your code within 24 hours, your Zindi account will be disabled and you will be disqualified from winning any competitions or Zindi points.

Further updates and rulings of note:

- Multiple accounts per user, collaboration or membership across multiple teams are not allowed.

- Code may not be shared privately. Any code that is shared, must be made available to all competition participants through the platform.

- Solutions must use publicly-available, open-source packages only, and all packages must be the most updated versions.

- Solutions must not infringe the rights of any third party and you must be legally entitled to assign ownership of all rights of copyright in and to the winning solution code to Zindi.

- You will be disqualified if you do not respond within the timeframe given in the request for code.

We reserve the right to update these rules at any time.

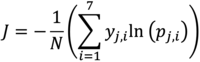

The evaluation metric for this challenge is Cross Entropy with binary outcome for each crop:

In which:

- j indicates the field number (j=1 to N)

- N indicates total number of fields in the dataset (3,361 in the train and 1,436 in the test)

- i indicates the crop type (i=1 to 7)

- y_j,i is the binary (0, 1) indicator for crop type i in field j (each field has only one correct crop type)

- p_j,i is the predicted probability (between 0 and 1) for crop type i in field j
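The formula itself appears only as an image on the original page. Assuming it is the standard multi-class form, CE = -(1/N) Σ_j Σ_i y_j,i log(p_j,i), the metric can be sketched as below. The helper name and the clipping bound `eps` are assumptions (the official scorer may clip differently), and if each crop is instead scored as an independent binary outcome, a (1 - y_j,i) log(1 - p_j,i) term would be added inside the sum. As a reference point, predicting 1/7 for every crop gives ln 7 ≈ 1.946.

```python
import math


def crop_cross_entropy(y_true, y_prob, eps=1e-15):
    """Mean cross entropy over fields for one-hot labels and 7 crop probabilities.

    y_true: rows of 0/1 indicators, exactly one 1 per field.
    y_prob: rows of predicted probabilities in the same crop order.
    Probabilities are clipped to [eps, 1 - eps] so log(0) cannot occur.
    """
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same number of fields")
    total = 0.0
    for y_row, p_row in zip(y_true, y_prob):
        for y, p in zip(y_row, p_row):
            p = min(max(p, eps), 1.0 - eps)
            total += y * math.log(p)
    return -total / len(y_true)


uniform = crop_cross_entropy([[1, 0, 0, 0, 0, 0, 0]], [[1 / 7] * 7])
```

Because only the true crop's probability enters each field's term, a confident wrong prediction is penalized heavily, which is why clipping or smoothing predictions away from 0 matters for this metric.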

Your submission should include FieldIDs of the fields in the test dataset and corresponding probability for each crop type. Your submission file should look like:

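| FieldID | CropId_1 | CropId_2 | CropId_3 | CropId_4 | CropId_5 | CropId_6 | CropId_7 |
|---|---|---|---|---|---|---|---|
| `<integer>` | `<float>` | `<float>` | `<float>` | `<float>` | `<float>` | `<float>` | `<float>` |
| 1184 | 0.14 | 0.14 | 0.14 | 0.14 | 0.14 | 0.14 | 0.16 |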
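A submission file in this layout can be written with Python's standard `csv` module. This is a sketch: `write_submission` is a hypothetical helper, and renormalizing each row to sum to 1 is a defensive choice rather than a stated requirement. In practice `handle` would be a file opened with `open(path, "w", newline="")`.

```python
import csv
import io

CROP_COLUMNS = [f"CropId_{i}" for i in range(1, 8)]


def write_submission(field_ids, probs, handle):
    """Write a header plus one row per test field: FieldID and 7 crop probabilities."""
    writer = csv.writer(handle)
    writer.writerow(["FieldID"] + CROP_COLUMNS)
    for field_id, row in zip(field_ids, probs):
        if len(row) != len(CROP_COLUMNS):
            raise ValueError(f"field {field_id}: expected 7 probabilities, got {len(row)}")
        total = sum(row)
        if total <= 0:
            raise ValueError(f"field {field_id}: probabilities must sum to a positive value")
        # Renormalize so each field's probabilities sum to 1.
        writer.writerow([int(field_id)] + [f"{p / total:.6f}" for p in row])


buf = io.StringIO()
write_submission([1184], [[0.14] * 6 + [0.16]], buf)
print(buf.getvalue())
```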

There are FIVE winners for this competition. Each winner will be invited to take part in the CV4A workshop at ICLR, taking place online 26 April 2020.

- 1st place overall from any country: An invitation to ICLR plus $1,500 USD.

- 1st place African citizen currently residing in Africa: An invitation to ICLR plus 1) $1,000 USD, and 2) 1-yr subscription to Descartes Labs Platform.

- The Naver prize for 1st place female-identified African citizen currently residing in Africa: An invitation to ICLR plus $1,000 USD.

- 2nd place overall from any country: An invitation to ICLR plus $1,000 USD.

- 3rd place overall from any country: An invitation to ICLR plus $500 USD.

Additional conditions to note:

- The Naver prize will be selected first; the African citizen prize will be selected second; the 'overall' prizes will go to the next highest placed remaining winners.

- If a winner is not able to attend ICLR or accept the prize, we reserve the right to assign the prize to another top person or team on the leaderboard.

- If you are working in a team and your team wins, you will need to notify Zindi immediately of who will represent the team at ICLR.

- If a team is composed of both (female) African and non-African data scientists, then your team's category will be determined by the gender and nationality of the person you put forward to represent the team at ICLR.
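One reading of the selection order above can be sketched as a greedy pass over the final leaderboard. The helper and its entry fields (`african` for an African citizen currently residing in Africa, `female` for female-identified) are hypothetical, and the organizers' actual procedure may differ, for example when a winner cannot attend or accept the prize.

```python
def allocate_prizes(leaderboard):
    """Assign prizes in the stated order: Naver, then African citizen, then overall.

    leaderboard: entries sorted best-first, each a dict with keys
    "name", "african" and "female". Returns {prize: name}; a prize with no
    eligible entry is omitted.
    """
    remaining = list(leaderboard)
    prizes = {}

    def award(prize, eligible):
        # Give the prize to the highest-placed remaining eligible entry.
        for entry in remaining:
            if eligible(entry):
                prizes[prize] = entry["name"]
                remaining.remove(entry)
                return

    award("Naver", lambda e: e["african"] and e["female"])
    award("African citizen", lambda e: e["african"])
    for prize in ("1st overall", "2nd overall", "3rd overall"):
        award(prize, lambda e: True)
    return prizes
```

Each winner is removed from the pool once awarded, so no entry collects two prizes and the 'overall' prizes go to the highest-placed entries that remain.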

Competition closes on 29 March 2020.

Final submissions must be received by 11:59 PM GMT.

We reserve the right to update the contest timeline if necessary.
