Microsoft Rice Disease Classification Challenge

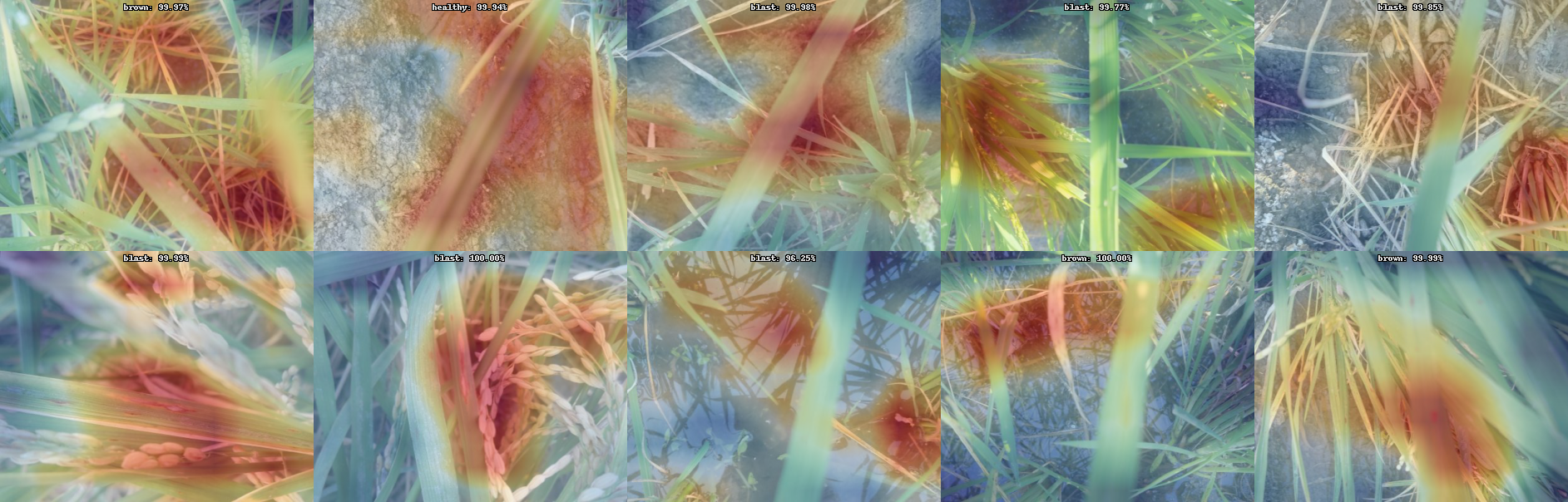

I found it interesting to inspect which parts of each image were most responsible for specific predictions. These heatmaps come from a not-particularly-good model, and in some places you can see it focusing on the ground, the water, or other seemingly arbitrary things. It's not a perfect method, but it's interesting, and I think it's something we should be doing.

For those who'd like to try it, I used https://github.com/Synopsis/amalgam with:

pip install fastai-amalgam

from fastai_amalgam.interpret.all import *

learn.gradcam('images/id_004wknd7qd.jpg')
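For anyone wondering what the heatmap represents, here is a rough sketch of the standard Grad-CAM computation. It assumes `gradcam` follows the usual recipe, which this post does not confirm. Each feature-map channel is weighted by the spatial mean of its gradient, the weighted maps are summed, and negative values are clipped. The function name `grad_cam` and the nested-list inputs are made up for illustration and kept dependency-free. In practice the activations and gradients would come from hooks on the last convolutional layer.

```python
def grad_cam(activations, gradients):
    """Illustrative Grad-CAM core (hypothetical helper, not the library's code).

    activations, gradients: nested lists shaped [channels][height][width],
    taken from one convolutional layer for one image and one target class.
    Returns a [height][width] heatmap scaled to the range 0..1.
    """
    n_ch = len(activations)
    h = len(activations[0])
    w = len(activations[0][0])

    # Channel weight = spatial mean of that channel's gradient.
    weights = [
        sum(sum(row) for row in gradients[k]) / (h * w)
        for k in range(n_ch)
    ]

    # Weighted sum over channels, then ReLU to keep positive evidence only.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(n_ch)))
            for j in range(w)
        ]
        for i in range(h)
    ]

    # Scale so the strongest location is 1 (skip if the map is all zeros).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy input: channel 0 supports the class, channel 1 argues against it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(acts, grads)
```

The resulting map is at the resolution of the feature layer, so it has to be upsampled to the image size before overlaying. That is part of why the blobs look so blurry.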

My subjective impression, based on a few different models, is that they focus on the parts I'd expect at least some of the time, but occasionally seem to derive information from patches of ground. Perhaps the data was collected from fields that are distinguishable by soil type? Or perhaps I'm just reading too much into a blurry blob :) Anyway, I hope you find this interesting. Good luck, all!
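One way to make that impression a little less subjective would be to measure how much of the heatmap falls on the plant versus the background. The sketch below assumes you have a heatmap as a 2D list and a hand-drawn binary mask of the plant region for the same image. Both the mask and the helper name `heat_share` are hypothetical, since nothing like this ships with the challenge data.

```python
def heat_share(cam, mask):
    """Fraction of total heatmap mass that lies inside the masked region.

    cam:  [height][width] list of non-negative heatmap values.
    mask: [height][width] list of 0/1 flags (1 = plant, 0 = background);
          assumed to be drawn by hand for illustration.
    """
    total = sum(sum(row) for row in cam)
    if total == 0:
        return 0.0  # empty heatmap: nothing to attribute
    inside = sum(
        value
        for cam_row, mask_row in zip(cam, mask)
        for value, flag in zip(cam_row, mask_row)
        if flag
    )
    return inside / total


# Toy example: three quarters of the heat sits on the "plant" pixel.
toy_cam = [[3.0, 1.0], [0.0, 0.0]]
toy_mask = [[1, 0], [0, 0]]
share = heat_share(toy_cam, toy_mask)
```

A low share across many images would hint that the model is leaning on the background, though a blurry low-resolution heatmap will leak across the mask boundary either way.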

Interesting insights, @Johnowhitaker. Thanks for this.

Thanks for sharing.

Interesting👍