GeoAI Challege Location Mention Recognition from Social Media by ITU

Hi guys, 🎉 Congratulations to everyone on another amazing competition. For folks curious about how I rose fast in the hackathon to achieve this score within a short time. I guess it was more of reading 📚 and research 🔍 on the dataset and previous works that have been done. So I discovered that Dr Reem Suwaileh had already done a lot of research and achieved very high benchmarks 📈 which are open source on hugging face, so I read them up, and focused more on pre-processing the text (tweet) and post-processing the model outputs. I've done most of my processing techniques in a pictorial view 📷 for easy understanding.

Below are some of the top techniques:

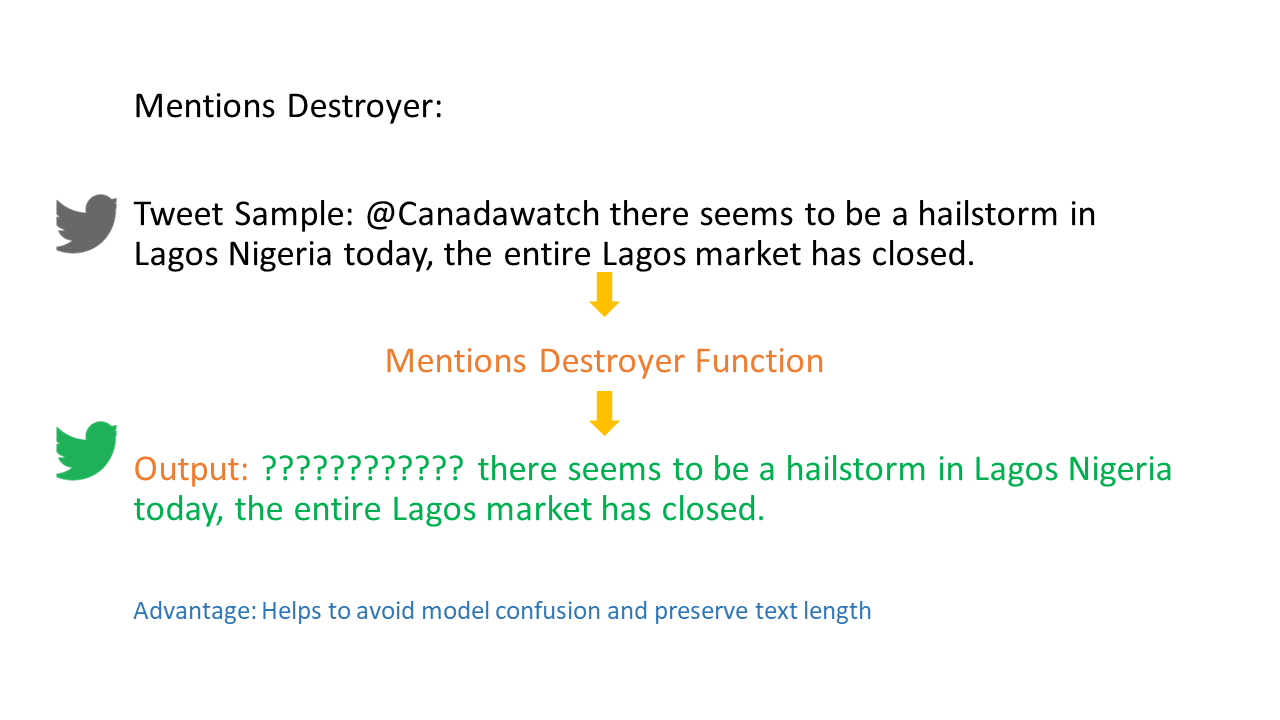

1. Destroy mentions so you don't confuse the model ❌ - You'll probably need to read up the paper or annotation instructions pdf in the dataset to learn more about how the data was annotated.

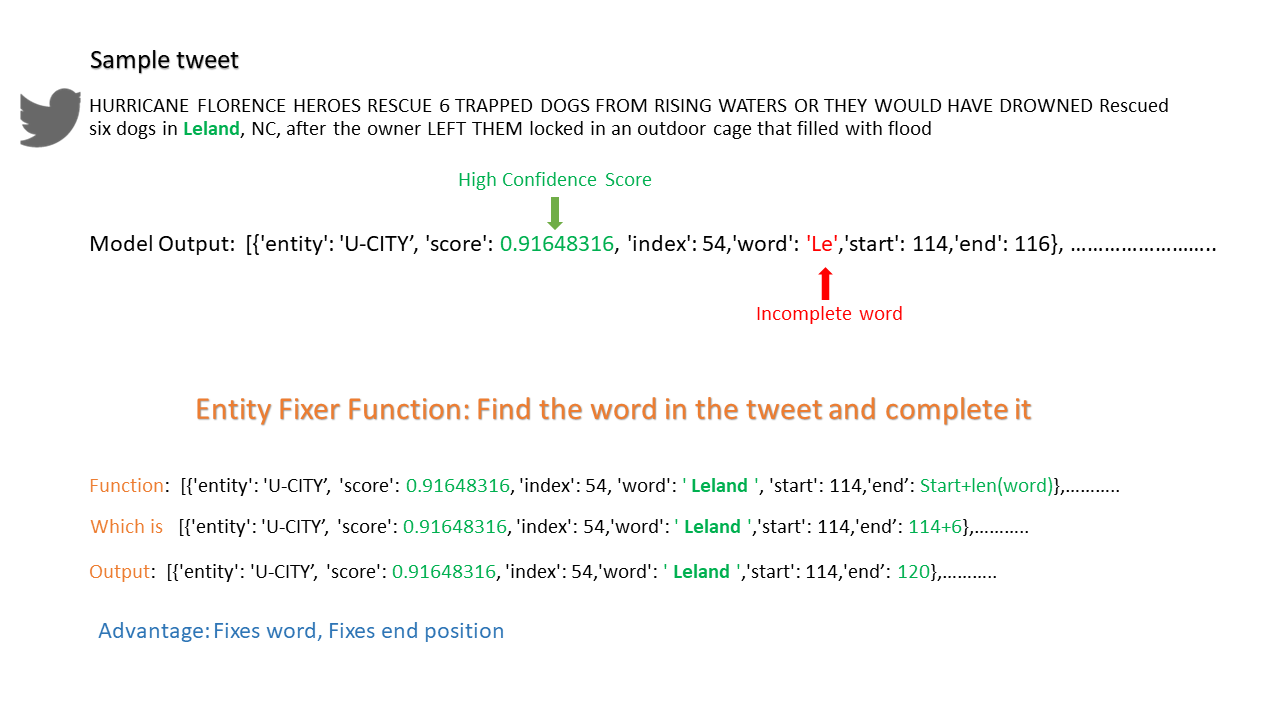

2. Fix entity ✨ - The model performs token classification, so if a word is more than a single token, then the predictions are slightly broken.

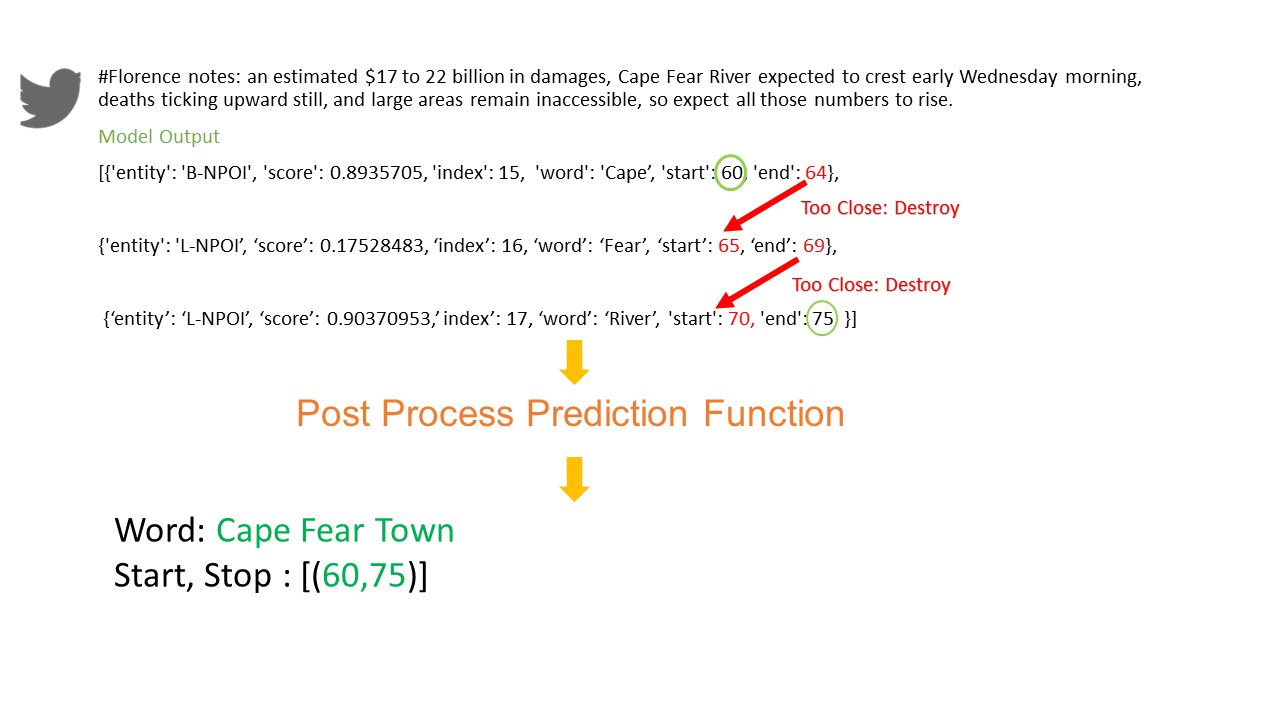

3. Post-Process-Prediction 🛠️: Locations which are more than a single word are sometimes broken down depending on the model in use, but they tend to have super close end and start positions.

I did some other minor stuff but these 3 functions made the real difference. 👍and of course, the solutions in code will be available soon. Congratulations to everyone. #keep learning, #keep winning.

Amazing proff! Congratulations on hacking this one, I did not participate in this one, looked somehow tough and interesting

Yeah! Thanks, Julius, we missed you here 😀.

Amazing boss, Congratulations on winning this .I will read more on Dr Reem Suwaileh works like you mentioned .I look forward to the code,Thanks for the explanation

Thanks a lot, Debola!