Malawi Public Health Systems LLM Challenge

Hi there, fellow Zindians! I hope this message finds you well. I am having trouble running @Professor's GPU/CPU RAG starter notebook on Kaggle and would appreciate some guidance from the community.

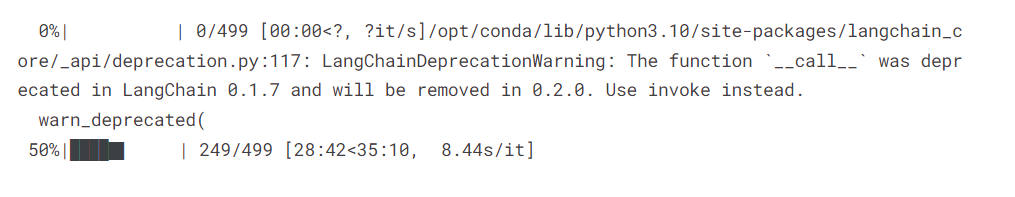

When I run the starter notebook on Kaggle, the kernel dies at record 249 of the inference. This is peculiar: when I run that question in isolation, or in a batch of 10 to 100 questions that includes it, I get no error. I assumed it was a resource issue caused by a memory leak, but nothing unusual shows up when I monitor resource usage. I even tried freeing memory every 10 iterations, but the run still stops as soon as it reaches iteration 249. The same code runs on Colab without any issue. I would appreciate any insight, as I would prefer to run my notebooks on Kaggle to save compute units. Thank you so much, and good luck!
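For anyone wanting to reproduce the check, here is a minimal sketch of freeing memory every 10 iterations while logging peak memory. It is not the starter notebook's code: `run_inference`, `answer_fn`, and `free_every` are hypothetical names, and `answer_fn` stands in for whatever function calls the RAG chain. Peak RSS is a high-water mark, so a sudden jump between two log lines points to the record that caused the spike.

```python
import gc
import resource  # Unix-only; assumed available on Linux-based notebook kernels


def peak_rss_mb() -> float:
    """Peak resident memory of this process in MB (ru_maxrss is in KB on Linux)."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024


def free_memory() -> None:
    """Run Python garbage collection and, if PyTorch is installed, clear the CUDA cache."""
    gc.collect()
    try:
        import torch

        if torch.cuda.is_available():
            torch.cuda.empty_cache()
    except ImportError:
        pass  # environment without PyTorch: nothing more to free


def run_inference(questions, answer_fn, free_every=10):
    """Answer each question; every `free_every` records, free memory and log peak RSS."""
    answers = []
    for i, question in enumerate(questions, start=1):
        answers.append(answer_fn(question))
        if i % free_every == 0:
            free_memory()
            print(f"record {i}: peak RSS {peak_rss_mb():.0f} MB")
    return answers
```

Note that this only tracks the notebook process's host RAM. GPU memory would need a separate check.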

Here are some of the logs:

1903.5s 21 File "/opt/conda/lib/python3.10/site-packages/nbclient/client.py", line 730, in _async_poll_for_reply

1903.5s 22 msg = await ensure_async(self.kc.shell_channel.get_msg(timeout=new_timeout))

1903.5s 23 File "/opt/conda/lib/python3.10/site-packages/nbclient/util.py", line 96, in ensure_async

1903.5s 24 result = await obj

1903.5s 25 File "/opt/conda/lib/python3.10/site-packages/jupyter_client/channels.py", line 310, in get_msg

1903.5s 26 ready = await self.socket.poll(timeout)

1903.5s 27 asyncio.exceptions.CancelledError

1903.5s 28

1903.5s 29 During handling of the above exception, another exception occurred:

1903.5s 30

1903.5s 31 Traceback (most recent call last):

1903.5s 32 File "/opt/conda/lib/python3.10/site-packages/nbclient/client.py", line 949, in async_execute_cell

1903.5s 33 exec_reply = await self.task_poll_for_reply

1903.5s 34 asyncio.exceptions.CancelledError

1903.5s 35

1903.5s 36 During handling of the above exception, another exception occurred:

1903.5s 37

1903.5s 38 Traceback (most recent call last):

1903.5s 39 File "<string>", line 1, in <module>

1903.5s 40 File "/opt/conda/lib/python3.10/site-packages/papermill/execute.py", line 119, in execute_notebook

1903.5s 41 nb = papermill_engines.execute_notebook_with_engine(

1903.5s 42 File "/opt/conda/lib/python3.10/site-packages/papermill/engines.py", line 48, in execute_notebook_with_engine

1903.5s 43 return self.get_engine(engine_name).execute_notebook(nb, kernel_name, **kwargs)

1903.5s 44 File "/opt/conda/lib/python3.10/site-packages/papermill/engines.py", line 365, in execute_notebook

1903.5s 45 cls.execute_managed_notebook(nb_man, kernel_name, log_output=log_output, **kwargs)

1903.5s 46 File "/opt/conda/lib/python3.10/site-packages/papermill/engines.py", line 434, in execute_managed_notebook

1903.5s 47 return PapermillNotebookClient(nb_man, **final_kwargs).execute()

1903.5s 48 File "/opt/conda/lib/python3.10/site-packages/papermill/clientwrap.py", line 45, in execute

1903.5s 49 self.papermill_execute_cells()

1903.5s 50 File "/opt/conda/lib/python3.10/site-packages/papermill/clientwrap.py", line 72, in papermill_execute_cells

1903.5s 51 self.execute_cell(cell, index)

1903.5s 52 File "/opt/conda/lib/python3.10/site-packages/nbclient/util.py", line 84, in wrapped

1903.5s 53 return just_run(coro(*args, **kwargs))

1903.5s 54 File "/opt/conda/lib/python3.10/site-packages/nbclient/util.py", line 62, in just_run

1903.5s 55 return loop.run_until_complete(coro)

1903.5s 56 File "/opt/conda/lib/python3.10/asyncio/base_events.py", line 649, in run_until_complete

1903.5s 57 return future.result()

1903.5s 58 File "/opt/conda/lib/python3.10/site-packages/nbclient/client.py", line 953, in async_execute_cell

1903.5s 59 raise DeadKernelError("Kernel died")

1903.5s 60 nbclient.exceptions.DeadKernelError: Kernel died

1905.3s 61 /opt/conda/lib/python3.10/site-packages/traitlets/traitlets.py:2930: FutureWarning: --Exporter.preprocessors=["remove_papermill_header.RemovePapermillHeader"] for containers is deprecated in traitlets 5.0. You can pass `--Exporter.preprocessors item` ... multiple times to add items to a list.

1905.3s 62 warn(

Same here when I try the starter notebook given by @Professor.

Hi @Sach and @GIrum, unfortunately I don't have access to my PC right now, so I may not be able to do much. However, I'll try to make an edit from my mobile phone to test larger models. One important point: the textbook data that was ingested and converted to a vector database is very dirty, so retrieving data uses a lot of RAM, especially because of the Unicode characters. (I left it that way since it's a basic notebook.) What you'll want to do is clean the reference textbook properly.

Some ideas:

If I can, I'll try from my end and let you know. But this should keep your kernel from dying and give you a much better score.
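As a rough illustration of the kind of cleaning suggested above, here is a minimal sketch using only the Python standard library. It is an assumption about what "cleaning" could look like, not the edits actually made to the starter notebook, and `clean_text` is a hypothetical helper name. It would be applied to the extracted textbook text before chunking and embedding.

```python
import re
import unicodedata


def clean_text(text: str) -> str:
    """Normalize extracted textbook text and drop non-ASCII and control characters."""
    # NFKD splits compatibility characters: ligatures become plain letters,
    # accented letters become a base letter plus a combining mark.
    text = unicodedata.normalize("NFKD", text)
    # Keep only ASCII; combining marks and stray symbols are dropped here.
    text = text.encode("ascii", "ignore").decode("ascii")
    # Replace remaining control characters (except newline and tab) with spaces.
    text = re.sub(r"[^\x20-\x7E\n\t]", " ", text)
    # Collapse runs of spaces/tabs, then runs of blank lines.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n\s*\n+", "\n\n", text)
    return text.strip()
```

One caution: dropping every non-ASCII character also removes meaningful symbols such as µ, ° or ≥, which matter in health text (for example dosages). It may be safer to map those to ASCII equivalents explicitly before this step.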

Hi @Professor, thanks for the response. However, I doubt that retrieval from the vector DB is the problem. I created an alternative solution in which I iterated through every question, retrieved 3 chunks from the vector DB, formulated the LangChain prompt, and stored the prompt in a pandas DataFrame. I then imported the DataFrame into a separate notebook and ran the prompts through the LLM, but it still gives the same error.

Interesting. So I take it the problem is in the LLM inference, right? Any idea what the retrieved text (3 chunks) looks like? Are there Unicode characters in it?
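One quick way to check is to count the non-ASCII characters in the retrieved chunks. The sketch below is only an illustration: `non_ascii_report` is a hypothetical helper, and `chunks` is assumed to be a plain list of strings (for example, the text of the 3 retrieved chunks for one question).

```python
import unicodedata
from collections import Counter


def non_ascii_report(chunks):
    """Return (share of non-ASCII characters, Counter of their Unicode names)."""
    total = 0
    counts = Counter()
    for chunk in chunks:
        total += len(chunk)
        for ch in chunk:
            if ord(ch) > 127:
                # Fall back to the code point when the character has no name.
                counts[unicodedata.name(ch, f"U+{ord(ch):04X}")] += 1
    share = sum(counts.values()) / total if total else 0.0
    return share, counts
```

Calling `non_ascii_report(chunks)[1].most_common(10)` lists the most frequent offenders, which helps decide what to strip or replace.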

Hi @Sach and @GIrum, I just successfully ran Llama 13B too, without errors. Check my edits here. Let me know if the same data-cleaning edits work for your model of choice. The raw score on the public LB is 0.38+.

PS: it was run on Kaggle kernels.

Hi @Professor, I just tested it with TinyLlama and it is working perfectly. Thank you so much for the assistance and for the starter notebook. As a beginner, I have spent the past 3 days searching countless forums for a solution, and not once did it cross my mind to remove the Unicode characters 😂😂 Out of curiosity, what effect do the Unicode characters have during inference?

Hi @Sach, your kernel was dying because the RAM was getting maxed out, so the best thing is to conserve memory. You could also use an extremely small model or smaller prompt chunks (fewer tokens) and it would work.
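The smaller-prompt option can be sketched as a cap on how much retrieved context goes into each prompt. This is a minimal sketch under stated assumptions: `build_prompt` and `max_context_words` are hypothetical, the prompt template is not the starter notebook's, and whitespace-separated words are only a crude proxy for tokens (the model's own tokenizer would give the real count).

```python
def build_prompt(question, chunks, max_context_words=300):
    """Join retrieved chunks into a prompt, truncating the context to a word budget."""
    kept = []
    remaining = max_context_words
    for chunk in chunks:
        if remaining <= 0:
            break  # budget used up: drop the remaining chunks
        words = chunk.split()
        if not words:
            continue  # skip empty chunks
        taken = words[:remaining]
        kept.append(" ".join(taken))
        remaining -= len(taken)
    context = "\n\n".join(kept)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
```

Lowering `max_context_words` trades some retrieved evidence for a smaller memory footprint at inference time.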

In this case, the textbook text contains a lot of noise (junk text) because an unstructured loader was used. The best thing would be to clean the data and remove that noise, especially the Unicode characters, which eat up a lot of RAM. It's basically all about efficient memory management.