QoS Prediction Challenge by ITU AI/ML in 5G Challenge


Start: Jun 02, 23 · Close: Jul 15, 23 · Reveal: Jul 15, 23

Second Place Solution

Notebooks · 26 Jul 2023, 16:25 · 5

I would like to express my gratitude to Zindi for organizing this competition and providing a platform for participants to showcase their skills and expertise. It was an enriching experience that allowed me to enhance my knowledge and proficiency in machine learning and artificial intelligence.

Solution:

- Include lag features, especially "last timestamp" - Score decreased from 100xxxxx to 94xxxxx.

- Utilize aggregate features grouped by "PCell identity" and "Scell identity" - Score improved from 94xxx to 89xxx.

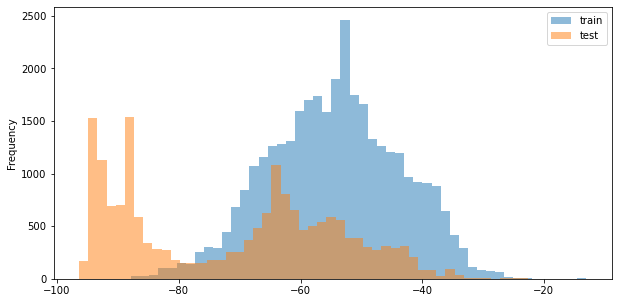

- Drop "PCell_RSSI_max" feature (train-test inconsistency) - Improved score from 89xxxxx to 83xxxxx.

- Final submission using LGBM.
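The aggregate-feature step above can be sketched roughly as follows. This is a minimal illustration, not the code from the linked repository: the helper `add_group_aggregates`, the column names `PCell_identity` and `PCell_RSRP_max`, and the toy values are all assumptions made for the example.

```python
import pandas as pd

def add_group_aggregates(df, group_col, value_cols, stats=("mean", "std")):
    """Append per-group statistics of value_cols as new columns (hypothetical helper)."""
    out = df.copy()
    for col in value_cols:
        for stat in stats:
            # transform() broadcasts the group statistic back to every row of the group
            out[f"{col}_{stat}_by_{group_col}"] = df.groupby(group_col)[col].transform(stat)
    return out

# Toy frame; column names and values are placeholders, not the competition data.
toy = pd.DataFrame({
    "PCell_identity": [1, 1, 2, 2, 2],
    "PCell_RSRP_max": [-90.0, -80.0, -100.0, -95.0, -105.0],
})
toy_agg = add_group_aggregates(toy, "PCell_identity", ["PCell_RSRP_max"], stats=("mean",))
print(toy_agg)
```

Each row gets the mean of its own cell's measurements as an extra column, so a tree model such as LGBM can compare a single reading against what is typical for that cell.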

"PCell_RSSI_max" Feature train-test inconsistency :

Link to solution: Nizar-Charrada/QoS_Prediction_Challenge_Second_Place_Solution: Second Place solution for QoS_Prediction_Challenge by Zindi (github.com)
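One simple way to spot a train-test inconsistency like the one in "PCell_RSSI_max" is to put a few quantiles of the feature side by side for both sets. The sketch below is an assumption about how such a check could look, not necessarily how the inconsistency was found here; `compare_feature` is a hypothetical helper and the toy values are made up for illustration.

```python
import pandas as pd

def compare_feature(train, test, col, quantiles=(0.05, 0.5, 0.95)):
    """Side-by-side quantiles of one feature in train and test (hypothetical helper)."""
    q = list(quantiles)
    return pd.DataFrame({
        "train": train[col].quantile(q),
        "test": test[col].quantile(q),
    })

# Toy values only: the test column is deliberately shifted relative to train.
toy_train = pd.DataFrame({"PCell_RSSI_max": [-60.0, -58.0, -62.0, -61.0, -59.0]})
toy_test = pd.DataFrame({"PCell_RSSI_max": [-40.0, -38.0, -42.0, -41.0, -39.0]})
report = compare_feature(toy_train, toy_test, "PCell_RSSI_max")
print(report)
```

If the two columns of the report differ far more for one feature than for the others, that feature is a candidate for dropping or re-scaling before training.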

I would also like to hear from @CalebEmelike about how they achieved a score below 80xxx. Your insights would be greatly appreciated.

Thank you,

Hi @Charrada, thank you for your solution. Mine is pretty much the same; I just took lag features on all the columns and didn't use groupby. For me, the lag features did the magic. I also trained on CatBoost only.

Congratulations @CalebEmelike and @Charrada. I just have a question: how did you avoid overfitting when using the lag features? I also used lag features with CatBoost, but even though I was getting RMSE scores of 88.... and 89... locally, with every submission the RMSE went as high as 105..., 107... This was my code:

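```python
# Sort rows by time-of-day fields (not by a full timestamp or device).
data = data.sort_values(by=['day_of_week', 'hour', 'minute', 'second'])

# Boolean mask for rows of operator 1; where(mask) sets all other rows to NaN.
mask = data['operator'] == 1

# Previous row's PCell values; shift() runs over the whole frame, not per device.
shifted_values = data[PCell_cols].shift().where(mask)

# Current minus previous row; rows with no valid difference become 0.
PCell_result = data[PCell_cols].where(mask) - shifted_values
PCell_result = PCell_result.fillna(0)
```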

I did the same with Scell_cols, but it kept overfitting and there seemed to be no way around that. How did you avoid overfitting?

Amazing! I did not figure that out. Thanks for sharing, and congratulations.

Honestly, how did you guys think of lagging with the train/test timestamps looking like this? It seems lagging was the trick.

To be honest, I don't see any evidence in this plot suggesting that lag features would be ineffective. In my experience, it was through trial and error that I found success. It is also important to note that the "last timestamp" used was specifically the one for the corresponding device or operator.
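The point about using the last timestamp of the corresponding device can be sketched like this. It is only an illustration under assumed column names (`device`, `timestamp`, `PCell_RSRP_max`) and a hypothetical helper `add_group_lags`; the actual feature code is in the linked repository. The difference from a plain `shift()` over the whole frame is that each row is paired with the previous row of the same device, not with whatever row happens to precede it after sorting.

```python
import pandas as pd

def add_group_lags(df, group_cols, time_col, value_cols):
    """Add previous-row value and difference within each group (hypothetical helper)."""
    group_cols = list(group_cols)
    # Sort so that, inside every group, rows are in time order
    out = df.sort_values(group_cols + [time_col]).copy()
    for col in value_cols:
        # shift(1) inside groupby never crosses a group boundary
        prev = out.groupby(group_cols, sort=False)[col].shift(1)
        out[f"{col}_lag1"] = prev
        out[f"{col}_diff1"] = out[col] - prev
    return out

# Toy data with two devices in shuffled order; names and values are placeholders.
toy = pd.DataFrame({
    "device": ["B", "A", "A", "B", "A"],
    "timestamp": [1, 2, 1, 2, 3],
    "PCell_RSRP_max": [100.0, 12.0, 10.0, 90.0, 15.0],
})
lagged = add_group_lags(toy, ["device"], "timestamp", ["PCell_RSRP_max"])
print(lagged)
```

The first row of every device has no predecessor, so its lag is missing; whether to fill it with 0 or leave it as NaN for the model to handle is a separate choice.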