Fine-tuning the Helsinki NLP multilingual model to translate text from Yorùbá to English

Prerequisites

The code in this blog was run on a GPU-enabled platform, e.g., Google Colab.

Introduction

This article will build a machine translation model to translate sentences from the Yorùbá language to the English language. These sentences are from various resources such as news articles, conversations on social media, spoken transcripts, and books written purely in the Yorùbá language.

Machine translation for low-resource languages is quite rare, and it's hard to get accurate results because of the limited training data available for these languages. A dataset is available for Yoruba text (JW300), but it comes from the religious domain. We need a generalized model that can be used in multiple domains. This is where ai4d.ai comes in with more generalized data: all we have to do is train our model on this data and produce accurate results to secure a top position in the AI4D Yorùbá Machine Translation Challenge on Zindi. We will be using a Helsinki NLP model in this project, so let's talk about the group behind it.

Helsinki NLP

The Helsinki NLP models were trained by the Language Technology Research Group at the University of Helsinki. The group is on a mission to provide machine translation for all human languages. They also study and develop tools for processing human language, including automatic spelling and grammar checking, machine translation, and ASR (automatic speech recognition). For more information, visit Language Technology. These models are publicly available on Hugging Face and on GitHub.

The code is executed on Google Colab, so some modules, such as scikit-learn, are already pre-installed. However, some need to be installed:

!pip install -q transformers

!pip install -q sentencepiece

Importing essential libraries

import pandas as pd

import re

import string

import torch

from tqdm.notebook import tqdm

tqdm.pandas()

from sklearn.model_selection import train_test_split

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

Selecting options

- To clean your text, set the Clean variable to True. For now, I am training on the data without cleaning.

- To train your model, set the Train variable to True.

Clean = False

Train = True

Reading translation data

We will use pandas to read the training data to understand how our dataset looks.

data_path = "/content/" # change this to the path of your data folder

df = pd.read_csv(data_path + "Train.csv")

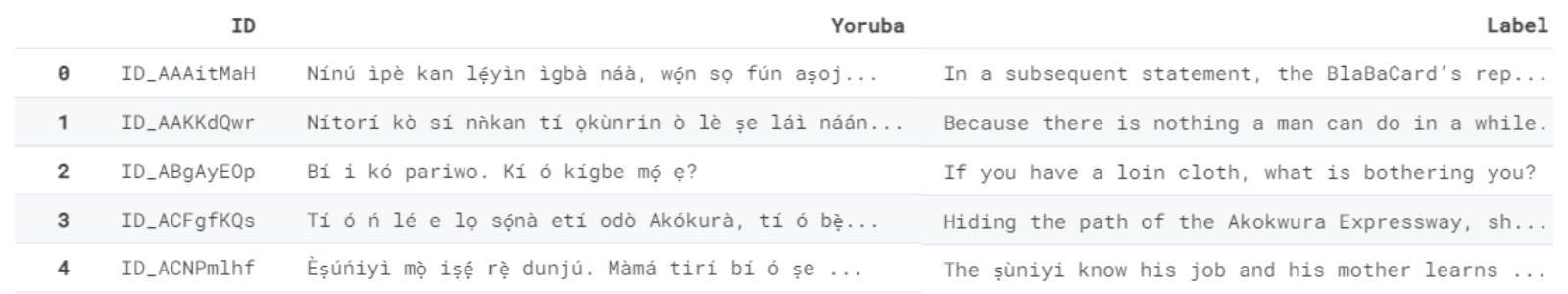

The training data consists of 10,054 parallel Yorùbá-English sentences. It has three columns: ID, a unique identifier; Yoruba, the text in the Yorùbá language; and English, the English translation of the Yorùbá text. The data looks fairly clean, and there are no missing values.

df.head()
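The claim that there are no missing values is easy to verify. The sketch below shows one way to do it with pandas; it uses a tiny made-up CSV (the rows and the names toy_csv and toy_df are placeholders, not the competition data) so that it runs on its own.

```python
import io

import pandas as pd

# Toy stand-in for Train.csv; these rows are placeholders for illustration only.
toy_csv = io.StringIO(
    "ID,Yoruba,English\n"
    "ID_1,yoruba sentence 1,english sentence 1\n"
    "ID_2,,english sentence 2\n"
)
toy_df = pd.read_csv(toy_csv)

# Count missing values per column; every count should be 0 for a clean file.
missing_per_column = toy_df.isna().sum()
print(missing_per_column)

# Rows that have at least one empty cell, useful for inspecting what is missing.
rows_with_gaps = toy_df[toy_df.isna().any(axis=1)]
print(rows_with_gaps)
```

On the real data, the same two calls on df should report zero missing values in every column if the file is as clean as it looks.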

Cleaning data

The optional cleaning step below removes punctuation marks, converts the text to lower case, and removes digits, which may help the model perform better. I am keeping this step off for now, but I will use it in future experiments.

if Clean:

    # converting every letter to lower case

    df['Yoruba'] = df['Yoruba'].apply(lambda x: str(x).lower())

    df['English'] = df['English'].apply(lambda x: str(x).lower())

    # removing apostrophe from the sentences

    df['Yoruba'] = df['Yoruba'].apply(lambda x: re.sub("'","",x))

    df['English'] = df['English'].apply(lambda x: re.sub("'","",x))

    exclude = set(string.punctuation)

    # removing all the punctuations

    df['Yoruba'] = df['Yoruba'].apply(lambda x: ''.join(ch for ch in x if ch not in exclude))

    df['English'] = df['English'].apply(lambda x: ''.join(ch for ch in x if ch not in exclude))

    # removing digits from the sentences

    digit = str.maketrans('','',string.digits)

    df['Yoruba'] = df['Yoruba'].apply(lambda x: x.translate(digit))

    df['English'] = df['English'].apply(lambda x: x.translate(digit))
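The same cleaning steps can also be collected into one reusable function, which makes them easier to try on a single sentence. This is a minimal sketch, not part of the original notebook; the name clean_text is my own. It relies on the fact that string.punctuation already contains the apostrophe, so no separate apostrophe step is needed.

```python
import string

# ASCII punctuation to strip (this set already includes the apostrophe).
_PUNCTUATION = set(string.punctuation)
# Translation table that deletes the digits 0-9.
_DIGITS = str.maketrans("", "", string.digits)


def clean_text(text):
    """Lower-case the text, then drop ASCII punctuation and digits."""
    text = str(text).lower()
    text = "".join(ch for ch in text if ch not in _PUNCTUATION)
    return text.translate(_DIGITS)


print(clean_text("Hello, World! 123 it's"))
```

Applied to the dataframe, this would look like df['Yoruba'].apply(clean_text). Note that removed characters leave their surrounding spaces behind, and that Yorùbá tone marks are not ASCII punctuation, so they are left untouched.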

Loading Tokenizer and model

We use one of the hidden gems of machine translation: a model trained on multiple languages, including Yoruba. The Helsinki NLP models are among the best in the machine translation domain, and they lend themselves to transfer learning, which means we can load the pretrained weights and fine-tune them on our new data to get better results. We will use the Helsinki-NLP/opus-mt-mul-en model from Hugging Face and fine-tune it on the more generalized Yoruba text provided by the Artificial Intelligence for Development-Africa Network (ai4d.ai).

The model is publicly available on Hugging Face, and it's quite easy to download and train using the transformers library. We will use the GPU by adding .to('cuda') when loading the model.

- Source group: Multiple languages

- Target group: English

- OPUS readme: mul-eng

- Model: transformer

If you have a good internet connection, it won't take much time to download and load the model.

# using a pretrained model and then fine-tuning it on our dataset

tokenizer = AutoTokenizer.from_pretrained("Helsinki-NLP/opus-mt-mul-en")

model = AutoModelForSeq2SeqLM.from_pretrained("Helsinki-NLP/opus-mt-mul-en").to('cuda')

____________

Downloading: 100% 1.15k/1.15k [00:00<00:00, 23.2kB/s]

Downloading: 100% 707k/707k [00:00<00:00, 1.03MB/s]

Downloading: 100% 791k/791k [00:00<00:00, 1.05MB/s]

Downloading: 100% 1.42M/1.42M [00:00<00:00, 2.00MB/s]

Downloading: 100% 44.0/44.0 [00:00<00:00, 1.56kB/s]

Downloading: 100% 310M/310M [00:27<00:00, 12.8MB/s]

Preparing Model for Training

Optimizer

We will use the AdamW optimizer with a learning rate of 0.0001. I experimented with the different optimizers available in the PyTorch library, and AdamW worked best for this problem. Hyperparameter tuning gave the best result at this learning rate.

optimizer = torch.optim.AdamW(model.parameters(),lr=0.0001)

I have used the ekshusingh technique for fine-tuning the Helsinki NLP model. It is fast to train and needs few samples to produce good results.

After some hyperparameter tuning, I settled on the final parameters: 27 epochs and a batch size of 32.

The model_train() function first slices the data into batches, local_X and local_y. We use the tokenizer's prepare_seq2seq_batch function to convert the text into tokens that can be used as input for our model. Each step then runs a forward pass, backpropagates the loss, and updates the weights with the optimizer, and the function prints the loss.

def model_train():

    model.train()

    losses = 0  # running sum of batch losses across all epochs

    X = df['Yoruba']

    y = df['English']

    max_epochs = 27

    n_batches = 32  # batch size

    for epoch in tqdm(range(max_epochs)):

        # 125 steps x 32 rows = the first 4,000 rows of df in every epoch

        for i in tqdm(range(125)):

            # making batches (positional slicing with .iloc)

            local_X = X.iloc[i*n_batches:(i+1)*n_batches]

            local_y = y.iloc[i*n_batches:(i+1)*n_batches]

            # preparing the data according to the model input

            batch = tokenizer.prepare_seq2seq_batch(list(local_X),list(local_y),return_tensors='pt').to('cuda')

            output = model(**batch)

            # loss can be taken directly from the model output

            loss = output.loss

            optimizer.zero_grad()

            loss.backward()

            optimizer.step()

            losses = losses+loss

        average = losses/len(df)

        print('Loss: ' + str(average) )

    return model
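The inner loop in model_train() runs a fixed 125 steps with 32 rows each, so every epoch sees the first 4,000 rows of the 10,054 available. The sketch below works out the batch boundaries in plain Python, both for that fixed step count and for the case where you would want to cover every row. The helper name batch_bounds is hypothetical and not part of the original notebook.

```python
import math


def batch_bounds(n_rows, batch_size, n_steps=None):
    """Return (start, stop) row indices for each batch.

    If n_steps is None, use enough steps to cover every row.
    """
    if n_steps is None:
        n_steps = math.ceil(n_rows / batch_size)
    bounds = []
    for i in range(n_steps):
        start = i * batch_size
        if start >= n_rows:
            break  # no rows left to slice
        stop = min((i + 1) * batch_size, n_rows)
        bounds.append((start, stop))
    return bounds


fixed = batch_bounds(n_rows=10054, batch_size=32, n_steps=125)
full = batch_bounds(n_rows=10054, batch_size=32)
print(fixed[-1])  # last batch when using 125 steps
print(len(full))  # number of steps needed to see every row
print(full[-1])   # the final, shorter batch
```

Whether training on all rows helps is something to test rather than assume; the fixed 125 steps keep each epoch short, which suits quick experiments.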

Training

Training took about 30 minutes for 27 epochs, with each epoch taking approximately 38 seconds to run. The final reported loss is 0.0755, which looks promising, but it is a training loss (the accumulated loss divided by the number of rows in df), so we still need to check the model on the evaluation metric.

if Train:

    model = model_train()

100% 125/125 [00:38<00:00, 3.84it/s]

Loss: tensor(0.0755, device='cuda:0', grad_fn=<DivBackward0>)
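The printed value is a tensor because the loss tensors are summed directly. If you prefer a plain number that is comparable between epochs, one option is to collect loss.item() (which returns a Python float) for each batch and average per epoch. The sketch below shows only the averaging step, with made-up loss values; the name mean_batch_loss is hypothetical.

```python
def mean_batch_loss(batch_losses):
    """Average a list of per-batch loss values (plain floats)."""
    if not batch_losses:
        raise ValueError("no batch losses recorded")
    return sum(batch_losses) / len(batch_losses)


# Made-up values standing in for loss.item() from three batches of one epoch.
epoch_losses = [1.0, 0.5, 0.0]
print(mean_batch_loss(epoch_losses))
```

Resetting the list at the start of every epoch gives a per-epoch mean, which is easier to compare across runs than a running total.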

Testing model

Testing on a single sample from the test dataset.

a = model.generate(**tokenizer.prepare_seq2seq_batch('Nínú ìpè kan lẹ́yìn ìgbà náà, wọ́n sọ fún aṣojú iléeṣẹ́ BlaBlaCar pé ètò náà ti yí padà, pé',return_tensors='pt').to('cuda'))

text = tokenizer.batch_decode(a)

The model performed quite well, and the sentence makes sense, although the company name comes out garbled. We still need to remove the brackets, <pad>, and stray quote characters to clean the output.

text

['<pad> In a statement after that hearing, the BualaCard’s representative was told that the event had changed, that he had turned up.']

Using re.sub and str.replace, we remove <pad>, the apostrophes, and the square brackets from our text.

text = str(text)

text = re.sub("<pad> ","",text)

text = re.sub("'","",text)

text = text.replace("[", "")

text = text.replace("]", "")

The final text looks clean, and it's almost perfect for our initial evaluation.

text

'In a statement after that hearing, the BualaCard’s representative was told that the event had changed, that he had turned up.'
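Converting the decoded list to a string and then stripping quotes also deletes any genuine ASCII apostrophes in the translation. A gentler alternative, sketched here under the assumption that batch_decode returns a list with one string per input, is to take the string out of the list and remove only the padding marker. The function name clean_prediction is my own.

```python
def clean_prediction(decoded):
    """Turn the list returned by batch_decode into one clean string."""
    # Take the first (here: only) decoded sequence instead of calling str(list).
    sentence = decoded[0]
    # Drop the padding marker the decoder places at the start of the output.
    return sentence.replace("<pad>", "").strip()


raw = [
    "<pad> In a statement after that hearing, the BualaCard’s representative "
    "was told that the event had changed, that he had turned up."
]
print(clean_prediction(raw))
```

The transformers tokenizer can also drop special tokens during decoding via tokenizer.batch_decode(a, skip_special_tokens=True), which may make the manual <pad> removal unnecessary.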

Prediction on the test dataset

Let's generate predictions for the test dataset and check how well our model performs. We will load the test dataset, which you can access from the Zindi platform.

test = pd.read_csv(data_path + "Test.csv")

We generate a prediction for each sentence and batch-decode the output tensor into text. Using .progress_apply, we translate all the Yoruba text in the test dataset and store the result in a new column named Label.

test["Label"] = ""

test["Label"]=test["Yoruba"].progress_apply(lambda x:tokenizer.batch_decode(model.generate(**tokenizer.prepare_seq2seq_batch([x],return_tensors='pt').to('cuda'))))

0%| | 0/6816 [00:00<?, ?it/s]
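The progress_apply call above translates one sentence per generate call. Sending several sentences at a time usually makes better use of the GPU. The sketch below shows only the chunking logic: translate_batch is a hypothetical callable that would wrap the tokenizer, model.generate, and batch_decode in the real notebook, and here it is replaced by a stand-in so the example runs without a model.

```python
def translate_in_batches(texts, translate_batch, batch_size=16):
    """Apply translate_batch to texts in chunks and return a flat list."""
    results = []
    for start in range(0, len(texts), batch_size):
        chunk = texts[start:start + batch_size]
        results.extend(translate_batch(chunk))
    return results


# Stand-in for the real model call so the sketch runs without a GPU.
def fake_translate_batch(chunk):
    return [sentence.upper() for sentence in chunk]


print(translate_in_batches(["a", "b", "c"], fake_translate_batch, batch_size=2))
```

The output order matches the input order, so the result can be assigned straight to a dataframe column. The batch size of 16 is an arbitrary default, not a tuned value.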

Cleaning our predicted translation.

test['Label'] = test['Label'].astype(str)

test['Label'] = test['Label'].apply(lambda x: re.sub("<pad> ","",x))

test['Label'] = test['Label'].apply(lambda x: re.sub("'","",x))

test['Label'] = test['Label'].apply(lambda x: x.replace("[", ""))

test['Label'] = test['Label'].apply(lambda x: x.replace("]", ""))

test['Label'] = test['Label'].apply(lambda x: x.replace('"', ""))

You can see the final version of our test dataset below, and the translations look reasonable. To check how our model performed on the test dataset, we will upload the file to the Zindi platform.

test.head()

Metric

The competition uses the ROUGE score as its metric: the higher the score, the better your model performs. The leaderboard score of our model is 0.3025, which is not bad and will get you into the top 20.
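ROUGE scores a prediction by how much of its wording overlaps with a reference translation. To give a feel for the idea, here is a simplified ROUGE-1 F1 (unigram overlap) in plain Python. It is only a sketch: the whitespace tokenization and the choice of ROUGE-1 are my assumptions, and the competition's scorer may use a different variant and preprocessing.

```python
from collections import Counter


def rouge1_f1(reference, prediction):
    """Unigram-overlap F1 between a reference and a predicted sentence."""
    ref_tokens = reference.lower().split()
    pred_tokens = prediction.lower().split()
    if not ref_tokens or not pred_tokens:
        return 0.0
    # Clipped overlap: a word counts at most as often as it appears in both.
    overlap = sum((Counter(ref_tokens) & Counter(pred_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(rouge1_f1("the event had changed", "the event has changed"))
```

Here three of the four words match, so precision and recall are both 0.75. A local score like this can help compare experiments before submitting, but only the leaderboard gives the official number.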

Conclusion

Using the power of transfer learning, we have created a model that performed quite well on generalized Yoruba text. I have experimented with many publicly available models, but opus-mt-mul-en, the Helsinki NLP multilingual model on Hugging Face, performed by far the best on our low-resource language. Interest in machine translation research has arguably declined since Google Translate became widespread, yet it does not serve many low-resource languages well, so fine-tuning your own machine translation model with transformers gives you the freedom to translate any low-resource language. In the end, this was my starter code, and with multiple experiments and text preprocessing, I reached 14th rank in the competition with a final ROUGE score of 0.35168.

You can find my model on Hugging Face at kingabzpro/Helsinki-NLP-opus-yor-mul-en.

About the author

Abid Ali Awan is a certified data scientist who loves building machine learning models and blogging about the latest AI technologies. He is currently testing AI products at PEC-PIT. He has recently participated in 60+ competitions, ranging from data analytics to machine learning, to which he credits his creativity in dealing with challenges. You can reach him on LinkedIn.

Read the original article here.