MPEG-G Microbiome Classification Challenge

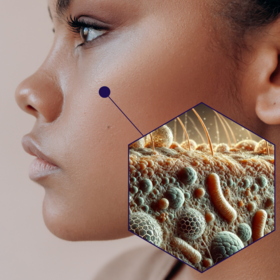

Your body is home to communities of trillions of microbes—tiny organisms that play a massive role in health and disease. These microbial communities vary dramatically depending on where they live in the body. The gut, mouth, skin, and nasal passages each host a unique mix of bacteria, viruses, fungi, and archaea. Scientists collect metagenomic data from these sites to understand how our microbiomes impact immunity, infection, and inflammation.

In this challenge, we’re going one step further by asking: can we use machine learning to predict where in the body a microbiome sample comes from, based solely on metagenomic data?

Your goal is to build a machine learning model that classifies a microbiome sample’s body site (stool, oral, skin, or nasal) based on its 16S rRNA gene sequence profile encoded in MPEG-G format, along with health status tags and participant metadata. Briefly, 16S rRNA sequencing identifies and classifies bacteria by sequencing a specific region of the 16S ribosomal RNA gene, a gene that is highly conserved among bacteria, and aligning the reads to a reference database, which allows for taxonomic classification.

This is a multi-modal classification problem that pushes the boundaries of microbiome data science—especially because the data is stored and accessed using a next-generation compression standard.

Building predictive power in microbiome metagenomics has far-reaching implications for human health and well-being: it can link microbiome profiles to patient conditions and treatment plans, and improve our understanding of how the microbiome and the immune system interact in different parts of the body.

This challenge will also promote the uptake and use of MPEG-G, a state-of-the-art bioinformatics file format optimised for machine learning applications.

Royal Philips (NYSE: PHG, AEX: PHIA) is a leading health technology company focused on improving people's health and well-being through meaningful innovation. Philips’ patient- and people-centric innovation leverages advanced technology and deep clinical and consumer insights to deliver personal health solutions for consumers and professional health solutions for healthcare providers and their patients in the hospital and the home. Headquartered in the Netherlands, the company is a leader in diagnostic imaging, ultrasound, image-guided therapy, monitoring, and enterprise informatics, as well as in personal health. Philips generated 2024 sales of EUR 18 billion and has approximately 67,200 employees, with sales and services in more than 100 countries.

A leader in the biomedical revolution, Stanford Medicine has a long tradition of leadership in pioneering research, creative teaching protocols and effective clinical therapies.

Cima is a non-profit biomedical research center founded in 2004, after more than 50 years of scientific experience at the University of Navarra. We conduct innovative, translational research of excellence in three major divisions of knowledge: Cancer; DNA and RNA Medicine; and Technological Innovation.

Our mission is to foster knowledge and innovate novel therapies through outstanding preclinical biomedical research, all directed towards enhancing the health of patients and with far-reaching impact across society. Cima aspires to become a leading center for translation of advanced genetic medicine.

With a profound translational orientation, Cima closes the loop between academia and industry by facilitating the transformation of its most promising research results into new drugs that benefit patients.

Fudan University, Intelligent Medicine Institute

Established under the Fudan Intelligent Medicine Institute, the Fudan Center for Phenomic and Precision Medicine (FCPPM) integrates cutting-edge technologies and clinical resources to advance precision medicine. By combining multi-omics data (genomics, metabolomics, proteomics, and single-cell omics) with AI-driven analytics, the center focuses on biomarker discovery and disease mechanism research, particularly in metabolic disorders, aging, and complex diseases.

FCPPM bridges clinical practice and research through its Visiting Scholar Program, enabling physicians to participate in translational projects. The center maintains global collaborations with leading institutions like Stanford and Oxford, supported by an International Advisory Committee.

To accelerate real-world impact, FCPPM offers a Fast-Track Translation Pipeline with industry partnerships and develops AI-enhanced bioinformatics training programs. Through interdisciplinary innovation, the center aims to deliver transformative "Fudan Solutions" in precision medicine.

The spirit of Leibniz – as one of the nine leading universities of technology in Germany, Leibniz University Hannover is committed to seeking sustainable, peaceful and responsible solutions to the key issues of tomorrow. Our ability to do this stems from our broad spectrum of subjects, which range from engineering and the natural sciences to architecture and environmental planning, and from law and economics to the social sciences and humanities.

Viome is a longevity and preventive healthcare company committed to bridging the gap between scientific breakthroughs and their practical implementation as health solutions. Utilizing cutting-edge AI and the world's largest metatranscriptome database, built from gut and oral microbiome and human samples, Viome's home-based tests offer individuals personalized health insights, nutritional guidance, and innovative products to enhance healthspan.

Join us on Thursday, 10 July at 13:00 GMT. Sign up here --> Introduction to the MPEG-G Microbiome Classification Challenge

Join us on Thursday, 31 July at 13:00 GMT. Sign up here --> Exploring the Microbiome: Introduction to the MPEG-G Decoding the Dialogue Challenge

Federated Learning (FL) is a machine learning approach where multiple devices or data sources (called clients) collaboratively train a model without sharing their raw data. Instead, each client trains a model locally and only shares model updates (like gradients or weights) with a central server, which aggregates them into a global model.
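As a minimal sketch of the aggregation step, the function below implements FedAvg-style weighted averaging, one common choice of server-side aggregation (Flower, for instance, provides a FedAvg strategy). It is framework-free and assumes each client reports its weights as a list of NumPy arrays with identical shapes, together with its number of local training samples; the function and variable names are illustrative, not part of the challenge materials.

```python
import numpy as np

def fedavg(client_weights, client_sizes):
    """Weighted average of per-client parameters (FedAvg-style aggregation).

    client_weights: one list of NumPy arrays per client, same shapes on every client.
    client_sizes:   number of local training samples on each client.
    """
    total = float(sum(client_sizes))
    aggregated = []
    for layer in range(len(client_weights[0])):
        # Each client's layer is scaled by its share of the total training data.
        aggregated.append(sum(
            (size / total) * weights[layer]
            for weights, size in zip(client_weights, client_sizes)
        ))
    return aggregated

# Two toy clients; client B holds three times as much data as client A.
client_a = [np.array([1.0, 1.0]), np.array([0.0])]
client_b = [np.array([3.0, 3.0]), np.array([2.0])]
global_weights = fedavg([client_a, client_b], client_sizes=[1, 3])
# global_weights -> [array([2.5, 2.5]), array([1.5])]
```

Weighting by sample count keeps clients with little data from pulling the global model as strongly as larger ones; an unweighted mean is the simpler alternative.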

For this challenge, you are provided with the data in two formats:

- Centralised format: A single folder containing all the samples. This should be used to train your centralised model.

- Federated format: Multiple folders, each representing different body sites (e.g., stool, oral, skin, nasal). These are intended for use in your federated learning solution.

You may use the provided folders as-is or re-group them logically (e.g., by participant) to simulate different clients in your FL setup.

Note that the main folder and the sub-folders contain the same data.
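Re-grouping samples into clients amounts to partitioning them by a chosen key. The sketch below assumes per-sample metadata records with hypothetical fields `filename`, `participant_id` and `body_site`; the real challenge metadata may use different column names.

```python
from collections import defaultdict

def group_into_clients(samples, key):
    """Partition sample records into simulated FL clients.

    samples: iterable of dicts describing each sample (hypothetical fields).
    key:     the field that defines a client, e.g. "body_site" or "participant_id".
    """
    clients = defaultdict(list)
    for record in samples:
        clients[record[key]].append(record["filename"])
    return dict(clients)

# Hypothetical metadata records for illustration only.
samples = [
    {"filename": "ID_A", "participant_id": "P1", "body_site": "Stool"},
    {"filename": "ID_B", "participant_id": "P1", "body_site": "Mouth"},
    {"filename": "ID_C", "participant_id": "P2", "body_site": "Skin"},
]
by_site = group_into_clients(samples, key="body_site")
by_participant = group_into_clients(samples, key="participant_id")
# by_participant -> {"P1": ["ID_A", "ID_B"], "P2": ["ID_C"]}
```

Grouping by body site gives each client samples from a single class, a strongly non-IID split, whereas grouping by participant mixes body sites within each client.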

Your solution must include:

- A centralised model, trained using the combined dataset.

- A federated learning approach, trained using the split datasets.

The aim is to demonstrate that MPEG-G files are more efficient, because they require less data movement and compress better, and that federated learning preserves performance across sites better than a centralised model. You must:

- Define Clients: Treat each folder (or grouping by participants/site) as a "location".

- Create a Federated Dataset Loader: Give each client its own dataset split (e.g., location1_data, location2_data, ...) and convert each split into a PyTorch Dataset.

- Define the Same PyTorch Model: Use the same architecture as the centralised model.

- Implement FL with Flower or PySyft

- Train the FL Model

- Make a submission to Zindi
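The steps above can be sketched without a framework to show the training logic that Flower or PySyft would orchestrate: one model definition shared by every client, one dataset per client, local training, and weighted averaging each round. This is an illustration under stated assumptions, not a reference solution: it uses a NumPy softmax classifier as a stand-in for the PyTorch model, and random synthetic features in place of features extracted from the MPEG-G files.

```python
import numpy as np

N_FEATURES, N_CLASSES = 8, 4  # hypothetical feature count; 4 body-site classes

def init_model(seed=0):
    # The server and every client use this same architecture (a linear softmax classifier).
    rng = np.random.default_rng(seed)
    return {"W": rng.normal(0, 0.01, (N_FEATURES, N_CLASSES)), "b": np.zeros(N_CLASSES)}

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def local_train(model, X, y, epochs=5, lr=0.1):
    """Gradient descent on cross-entropy, starting from the current global model."""
    W, b = model["W"].copy(), model["b"].copy()
    onehot = np.eye(N_CLASSES)[y]
    for _ in range(epochs):
        grad = (softmax(X @ W + b) - onehot) / len(X)
        W -= lr * X.T @ grad
        b -= lr * grad.sum(axis=0)
    return {"W": W, "b": b}

def fedavg(models, sizes):
    # Weighted average of each parameter, weighted by client sample count.
    total = sum(sizes)
    return {k: sum(m[k] * (n / total) for m, n in zip(models, sizes)) for k in models[0]}

def run_federated(clients, rounds=10):
    """clients: list of (X, y) tuples, one per simulated location."""
    global_model = init_model()
    for _ in range(rounds):
        local_models = [local_train(global_model, X, y) for X, y in clients]
        global_model = fedavg(local_models, [len(y) for _, y in clients])
    return global_model

# Synthetic stand-in data: three clients with random features and labels.
rng = np.random.default_rng(42)
clients = [(rng.normal(size=(20, N_FEATURES)), rng.integers(0, N_CLASSES, 20)) for _ in range(3)]
model = run_federated(clients)
probs = softmax(clients[0][0] @ model["W"] + model["b"])  # per-class probabilities
```

In a Flower-based solution, `local_train` would roughly correspond to a client's `fit` step and `fedavg` to the server strategy; the round count, epochs and learning rate here are arbitrary.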

In your final solution submission, you will need to provide three files: a submission file from your centralised model, a submission file from your federated learning model, and a technical explanation of the innovation and approach used. You will also need to report training time, inference time, resources used, and any observations on .mgb versus uncompressed formats.
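Training time, inference time and the .mgb comparison can be recorded with the standard library. In this sketch, `timed` and `compression_ratio` are hypothetical helpers and the file contents are dummy bytes; the uncompressed baseline to compare against (for example, FASTQ) is left to you, since the challenge does not prescribe one.

```python
import os
import tempfile
import time

def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start

def compression_ratio(uncompressed_path, compressed_path):
    """On-disk size of the uncompressed file divided by that of the compressed one."""
    return os.path.getsize(uncompressed_path) / os.path.getsize(compressed_path)

# Dummy files standing in for an uncompressed sample and its .mgb counterpart.
with tempfile.TemporaryDirectory() as tmp:
    raw = os.path.join(tmp, "sample.fastq")
    mgb = os.path.join(tmp, "sample.mgb")
    with open(raw, "wb") as f:
        f.write(b"A" * 1000)
    with open(mgb, "wb") as f:
        f.write(b"A" * 100)
    ratio = compression_ratio(raw, mgb)  # 10.0 for these dummy sizes

total, seconds = timed(sum, [1, 2, 3])  # stand-in for a training or inference call
```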

While the challenge is running, you can submit predictions from either your centralised or your federated model. However, for code review you will need to submit all three files mentioned above.

MPEG-G stands for Moving Picture Experts Group – Genomic Information Representation. It’s an ISO/IEC standard designed for efficient compression, transport, and processing of genomic and metagenomic data.

Why it matters:

- Traditional genomic datasets are massive, often hundreds of gigabytes per sample. MPEG-G reduces this size significantly—sometimes more than 10x—without losing critical information.

- It supports selective access to regions, samples, or types of data (e.g., only reads containing variants), which means faster, more efficient ML pipelines.

- It also includes metadata encoding, encryption, and streaming capabilities, enabling new forms of genomic data sharing and analysis at scale.

In this challenge, you’ll be working with microbiome data stored in this format—just like researchers and clinicians will in the future.

This challenge uses a two-phase evaluation: the first phase is based on the leaderboard, and the second is a rubric evaluation of the top 20 on the leaderboard.

Phase One: Leaderboard Evaluation

This challenge has an associated leaderboard on which submissions are ranked by log loss (lower is better).
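For local validation, multi-class log loss is the mean negative log of the probability assigned to each sample's true class. The sketch below assumes the four class columns shown in the submission example; it renormalises each row and clips probabilities away from 0 and 1, as many implementations do, but this page does not say how the official scorer treats rows that do not sum to 1, so submitting normalised probabilities is the safer choice.

```python
import math

CLASSES = ["Mouth", "Nasal", "Skin", "Stool"]

def multiclass_log_loss(y_true, y_prob, eps=1e-15):
    """Mean negative log-probability of the true class.

    y_true: list of class names; y_prob: per-class probability rows in CLASSES order.
    Rows are renormalised to sum to 1 and clipped to avoid log(0).
    """
    total = 0.0
    for label, row in zip(y_true, y_prob):
        p = row[CLASSES.index(label)] / sum(row)
        total += -math.log(min(max(p, eps), 1 - eps))
    return total / len(y_true)

score = multiclass_log_loss(
    ["Stool", "Mouth"],
    [[0.1, 0.1, 0.1, 0.7], [0.6, 0.2, 0.1, 0.1]],
)
# score == (-ln 0.7 - ln 0.6) / 2, roughly 0.434
```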

Note that the filename column does not include a file suffix such as .mgb.

Your submission file could look something like this:

| filename | Mouth | Nasal | Skin | Stool |
|---|---|---|---|---|
| ID_TVCGNY | 0.11 | 0.95 | 0.23 | 0.40 |
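A submission file in this layout can be produced with Python's `csv` module. The sketch assumes the usual Zindi CSV format and MPEG-G inputs named `<ID>.mgb`; it derives the ID with `Path.stem` so the `.mgb` suffix is dropped, and normalises each row to sum to 1 without rounding, since the rules exclude submissions that use rounding.

```python
import csv
import io
from pathlib import Path

CLASSES = ["Mouth", "Nasal", "Skin", "Stool"]  # column order from the example above

def write_submission(file_paths, probabilities, out):
    """Write a header plus one row per sample: ID without suffix, then class probabilities."""
    writer = csv.writer(out)
    writer.writerow(["filename", *CLASSES])
    for path, row in zip(file_paths, probabilities):
        total = sum(row)
        # Normalise so each row sums to 1; no rounding is applied.
        writer.writerow([Path(path).stem, *(p / total for p in row)])

buf = io.StringIO()  # for a real file: open("submission.csv", "w", newline="")
write_submission(["test/ID_TVCGNY.mgb"], [[0.11, 0.95, 0.23, 0.40]], buf)
print(buf.getvalue())
```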

Phase Two: Rubric Evaluation

The top 20 users on the leaderboard in Phase One, based on their log loss score, will be scored using the following rubric.

| Metric | Weight |
|---|---|
| Private leaderboard score based on a federated learning approach | 40% |
| Private leaderboard score based on a centralised model approach | 40% |
| Carbon emissions score | 20% |
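The Phase Two total is a weighted sum of the three components. How leaderboard log loss and carbon emissions are converted into rubric points is not specified here, so this sketch assumes each sub-score is already on a common 0-100 scale; the numbers in the usage line are made up for illustration.

```python
WEIGHTS = {"federated": 0.40, "centralised": 0.40, "carbon": 0.20}

def rubric_score(sub_scores):
    """Weighted total of sub-scores that are assumed to share a 0-100 scale."""
    return sum(WEIGHTS[name] * sub_scores[name] for name in WEIGHTS)

# Made-up sub-scores: 0.4*80 + 0.4*90 + 0.2*50 = 78
total = rubric_score({"federated": 80, "centralised": 90, "carbon": 50})
```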

🥇 1st Place — Tiny Margins, Big Margins - https://github.com/JuliusFx131/cyclic-federated-learning

🥈 2nd Place — Kmers - https://github.com/koleshjr/MPEG-G-Microbiome-Classification-Challenge

🥉 3rd Place — Ever_learners: https://github.com/codejoetheduke/3rd-place-solution-mpeg-g-classification

1st Prize: $2 500 USD

2nd Prize: $1 500 USD

3rd Prize: $1 000 USD

Winners will be introduced to the research team to possibly co-publish a paper on their solution.

There are 7 000 Zindi points available. You can read more about Zindi points here.

- Languages and tools: You may only use open-source languages and tools in building models for this challenge.

- Who can compete: This challenge is open to all.

- Submission Limits: 10 submissions per day, 300 submissions overall.

- Team size: Max team size of 4

- Public-Private Split: Zindi maintains a public leaderboard and a private leaderboard for each challenge. The Public Leaderboard includes approximately 30% of the test dataset. The private leaderboard will be revealed at the close of the challenge and contains the remaining 70% of the test set.

- Data Sharing: CC-BY 1.0 license

- Platform abuse: Multiple accounts, or sharing of code and information across accounts not in teams, or any other forms of platform abuse are not allowed, and will lead to disqualification.

- Code Review: Top 10 on the private leaderboard will receive an email requesting their code at the close of the challenge. You will have 48 hours to submit your code.

- Solutions of value: Solutions handed over to clients must be of value to the client, regardless of leaderboard ranking.

ENTRY INTO THIS CHALLENGE CONSTITUTES YOUR ACCEPTANCE OF THESE OFFICIAL CHALLENGE RULES.

Full Challenge Rules

This challenge is open to all.

Teams and collaboration

You may participate in challenges as an individual or in a team of up to four people. When creating a team, the team must have a total submission count less than or equal to the maximum allowable submissions as of the formation date. A team will be allowed the maximum number of submissions for the challenge, minus the total number of submissions among team members at team formation. Prizes are transferred only to the individual players or to the team leader.

Multiple accounts per user are not permitted, and neither is collaboration or membership across multiple teams. Individuals and their submissions originating from multiple accounts will be immediately disqualified from the platform.

Code must not be shared privately outside of a team. Any code that is shared must be made available to all challenge participants through the platform (e.g., on the discussion boards).

The Zindi data scientist who sets up a team is the default Team Leader but they can transfer leadership to another data scientist on the team. The Team Leader can invite other data scientists to their team. Invited data scientists can accept or reject invitations. Until a second data scientist accepts an invitation to join a team, the data scientist who initiated a team remains an individual on the leaderboard. No additional members may be added to teams within the final 5 days of the challenge or last hour of a hackathon.

The team leader can initiate a merge with another team. Only the team leader of the second team can accept the invite. The default team leader is the leader from the team who initiated the invite. Teams can only merge if the total number of members is less than or equal to the maximum team size of the challenge.

A team can be disbanded if it has not yet made a submission. Once a submission is made, individual members cannot leave the team.

All members in the team receive points associated with their ranking in the challenge and there is no split or division of the points between team members.

Datasets and packages

The solution must use publicly-available, open-source packages only.

You may use only the datasets provided for this challenge. Automated machine learning tools such as automl are not permitted.

You may use pretrained models as long as they are openly available to everyone.

You are allowed to access, use and share challenge data for any commercial, non-commercial, research or education purposes, under a CC-BY 1.0 license.

You must notify Zindi immediately upon learning of any unauthorised transmission of or unauthorised access to the challenge data, and work with Zindi to rectify any unauthorised transmission or access.

Your solution must not infringe the rights of any third party and you must be legally entitled to assign ownership of all rights of copyright in and to the winning solution code to Zindi.

Submissions and winning

You may make a maximum of 10 submissions per day.

You may make a maximum of 300 submissions for this challenge.

Before the end of the challenge you need to choose 2 submissions to be judged on for the private leaderboard. If you do not make a selection your 2 best public leaderboard submissions will be used to score on the private leaderboard.

Submissions that include rounding or threshold setting will be excluded from winning.

During the challenge, your best public score will be displayed regardless of the submissions you have selected. When the challenge closes your best private score out of the 2 selected submissions will be displayed.

Zindi maintains a public leaderboard and a private leaderboard for each challenge. The Public Leaderboard includes approximately 30% of the test dataset. While the challenge is open, the Public Leaderboard will rank the submitted solutions by their score (log loss for this challenge). Upon close of the challenge, the Private Leaderboard, which covers the other 70% of the test dataset, will be made public and will constitute the final ranking for the challenge.

Note that to count, your submission must first pass processing. If your submission fails during the processing step, it will not be counted and will not receive a score; nor will it count against your daily submission limit. If you encounter problems with your submission file, your best course of action is to ask for advice on the challenge’s discussion forum.

If you are in the top 10 at the time the leaderboard closes, we will email you to request your code. On receipt of email, you will have 48 hours to respond and submit your code following the Reproducibility of submitted code guidelines detailed below. Failure to respond will result in disqualification.

If your solution places top ten on the final leaderboard, you will be required to submit your winning solution code to us for verification.

If two solutions earn identical scores on the leaderboard, the tiebreaker will be the date and time in which the submission was made (the earlier solution will win).

The winners will be paid via bank transfer, PayPal if the payment is less than or equal to $100, or another international money transfer platform. International transfer fees will be deducted from the total prize amount, unless the prize money is under $500, in which case the international transfer fees will be covered by Zindi. In all cases, the winners are responsible for any other fees applied by their own bank or other institution for receiving the prize money. All taxes imposed on prizes are the sole responsibility of the winners. The top winners or team leaders will be required to present Zindi with proof of identification, proof of residence and a letter from their bank confirming their banking details. Winners will be paid in USD or the currency of the challenge. If your account cannot receive US Dollars or the currency of the challenge, then your bank will need to provide proof of this and Zindi will try to accommodate this.

Payment will be made after code review and sealing the leaderboard.

You acknowledge and agree that Zindi may, without any obligation to do so, remove or disqualify an individual, team, or account if Zindi believes that such individual, team, or account is in violation of these rules. Entry into this challenge constitutes your acceptance of these official challenge rules.

Zindi is committed to providing solutions of value to our clients and partners. To this end, we reserve the right to disqualify your submission on the grounds of usability or value. This includes but is not limited to the use of data leaks or any other practices that we deem to compromise the inherent value of your solution.

Zindi also reserves the right to disqualify you and/or your submissions from any challenge if we believe that you violated the rules or violated the spirit of the challenge or the platform in any other way. The disqualifications are irrespective of your position on the leaderboard and completely at the discretion of Zindi.

Please refer to the FAQs and Terms of Use for additional rules that may apply to this challenge. We reserve the right to update these rules at any time.

Reproducibility of submitted code

- If your submitted code does not reproduce your score on the leaderboard, we reserve the right to adjust your rank to the score generated by the code you submitted.

- If your code does not run, you will be dropped from the top 10. Please make sure your code runs before submitting your solution.

- Always set the seed. Rerunning your model should always place you at the same position on the leaderboard. When running your solution, if randomness shifts you down the leaderboard we reserve the right to adjust your rank to the closest score that your submission reproduces.

- Custom packages in your submission notebook will not be accepted.

- You may only use tools available to everyone i.e. no paid services or free trials that require a credit card.
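A minimal seeding helper might look like this. It covers Python's `random` module and NumPy's global generator; since the federated part of the challenge requires PyTorch, the comments note the corresponding `torch` calls, which are left out so the sketch runs without PyTorch installed.

```python
import os
import random

import numpy as np

def set_seed(seed=42):
    """Seed the Python and NumPy global generators used in a pipeline."""
    random.seed(seed)
    np.random.seed(seed)
    # Only affects Python subprocesses started after this call, not the current interpreter.
    os.environ["PYTHONHASHSEED"] = str(seed)
    # With PyTorch, also call torch.manual_seed(seed) and, on GPU,
    # torch.cuda.manual_seed_all(seed).

set_seed(123)
first = (random.random(), float(np.random.rand()))
set_seed(123)
second = (random.random(), float(np.random.rand()))
# first == second: reseeding reproduces the same draws
```

Passing an explicit `np.random.default_rng(seed)` generator into each component is an alternative that avoids relying on global state.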

Documentation

A README markdown file is required

It should cover:

- How to set up folders and where each file is saved

- Order in which to run code

- Explanations of features used

- Environment for the code to be run (conda environment.yml file or an environment.txt file)

- Hardware needed (e.g. Google Colab or the specifications of your local machine)

- Expected run time for each notebook. This will be useful to the review team for time and resource allocation.
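To document the environment, one option is to record interpreter, platform and package versions programmatically. The helper below is a hypothetical sketch that emits pip-style `name==version` pins suitable for an environment.txt file; the package names passed in are examples (`flwr` is Flower's PyPI package name).

```python
import platform
from importlib import metadata

def environment_report(packages):
    """Return interpreter/platform info as comments, then one pin per installed package."""
    lines = [
        f"# python {platform.python_version()}",
        f"# platform: {platform.platform()}",
    ]
    for name in packages:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            lines.append(f"# {name}: not installed")
    return "\n".join(lines)

print(environment_report(["numpy", "torch", "flwr"]))
```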

Your code needs to run properly; code reviewers do not have time to debug code. If your code does not run easily, you will be bumped down the leaderboard.

Consequences of breaking any rules of the challenge or submission guidelines:

- First offence: No prizes for 6 months and 2000 points will be removed from your profile (probation period). If you are caught cheating, all individuals involved in cheating will be disqualified from the challenge(s) you were caught in and you will be disqualified from winning any challenges for the next six months and 2000 points will be removed from your profile. If you have fewer than 2000 points on your profile, your points will be set to 0.

- Second offence: Banned from the platform. If you are caught for a second time your Zindi account will be disabled and you will be disqualified from winning any challenges or Zindi points using any other account.

- Teams with individuals who are caught cheating will not be eligible to win prizes or points in the challenge in which the cheating occurred, regardless of the individuals’ knowledge of or participation in the offence.

- Teams with individuals who have previously committed an offence will not be eligible for any prizes for any challenges during the 6-month probation period.

Monitoring of submissions

- We will review the top 10 solutions of every challenge when the challenge ends.

- We reserve the right to request code from any user at any time during a challenge. You will have 24 hours to submit your code following the rules for code review (see above). Zindi reserves the right not to explain our reasons for requesting code. If you do not submit your code within 24 hours you will be disqualified from winning any challenges or Zindi points for the next six months. If you fall under suspicion again and your code is requested and you fail to submit your code within 24 hours, your Zindi account will be disabled and you will be disqualified from winning any challenges or Zindi points with any other account.

Your GPU and training time restrictions are outlined below:

GPU VRAM: Maximum allowed is 32GB (for example, a V100 as available on Colab Pro+).

Training Time:

- Each individual model training (1x train/validation) run must not exceed 12 hours.

- Total training time (across all models/runs) must not exceed 24 hours.
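To stay within these limits, one approach is to log wall-clock time per run and keep a running total. The `TrainingBudget` class below is a hypothetical sketch: it only measures and reports, so stopping a run that exceeds the budget is left to your training loop.

```python
import time

RUN_LIMIT_S = 12 * 3600    # each individual train/validation run
TOTAL_LIMIT_S = 24 * 3600  # all runs combined

class TrainingBudget:
    """Accumulates wall-clock training time and reports whether limits still hold."""

    def __init__(self):
        self.total_s = 0.0
        self.runs = []

    def record(self, name, seconds):
        """Log a finished run; return True if both limits are still respected."""
        self.runs.append((name, seconds))
        self.total_s += seconds
        return seconds <= RUN_LIMIT_S and self.total_s <= TOTAL_LIMIT_S

    def time_run(self, name, train_fn):
        """Time a training callable and record it."""
        start = time.perf_counter()
        train_fn()
        return self.record(name, time.perf_counter() - start)

budget = TrainingBudget()
ok_central = budget.record("centralised", 10 * 3600)  # True
ok_fl = budget.record("federated", 11 * 3600)         # True: 21 h in total
ok_extra = budget.record("extra-tuning", 5 * 3600)    # False: total reaches 26 h
```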

We recommend using Google Colab or your local machine to work on this challenge.
