Tuning Meta LLMs for African Language Machine Translation

Despite the growing global reliance on LLMs for a wide variety of online tasks including machine translation, many African communities and languages are not able to benefit from these technological advances. LLMs work primarily in English, and the resources required to train and run an LLM make them inaccessible to many in the developing world, especially in Africa.

In this challenge, your task is to build a machine translation model, or improve on an existing one, that translates English sentences into Twi. The dataset is an English-Twi parallel corpus developed by GhanaNLP. You are encouraged to use one of Meta's language models, such as Llama, SeamlessM4T, or NLLB, or any derivative of them. Computational resources will be available via Colab, provided specifically for this challenge.

By enabling accurate and efficient translation between English and Twi, the solutions developed in this challenge will enhance access to digital content and services for Twi speakers. This is particularly relevant in areas such as education, where students can gain better access to learning materials, and in healthcare, where accurate translation can facilitate better patient outcomes by ensuring clear communication between healthcare providers and Twi-speaking patients.

Moreover, the use of open-source models, particularly those from Meta’s suite, ensures that the advancements made in this challenge are accessible and can be built upon by the broader community.

NB: Winning solutions must use open-source models from the Meta repository on Hugging Face, including, but not limited to, NLLB 200, SeamlessM4T, as well as any derivatives of these models.
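As a starting point, here is a minimal sketch of English-to-Twi inference with an NLLB-200 checkpoint through the Hugging Face `transformers` library. It assumes the `facebook/nllb-200-distilled-600M` checkpoint and the FLORES-200 language codes `eng_Latn` and `twi_Latn`. The checkpoint, batch size and generation length are illustrative rather than a recommended configuration, fine-tuning on the GhanaNLP corpus is not shown, and the helper names `chunks` and `translate_to_twi` are hypothetical.

```python
from typing import Iterator, List, Sequence

# Assumed checkpoint; other NLLB-200 checkpoints or fine-tuned derivatives load the same way.
MODEL_NAME = "facebook/nllb-200-distilled-600M"
SRC_LANG = "eng_Latn"  # FLORES-200 code for English
TGT_LANG = "twi_Latn"  # FLORES-200 code for Twi


def chunks(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items` with at most `batch_size` elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def translate_to_twi(sentences: Sequence[str], batch_size: int = 16,
                     max_new_tokens: int = 128) -> List[str]:
    """Translate English sentences into Twi with an NLLB-200 checkpoint."""
    # Heavy imports are deferred so `chunks` works without torch/transformers installed.
    import torch
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME, src_lang=SRC_LANG)
    model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_NAME).to(device).eval()
    # NLLB picks the output language from the first generated token.
    tgt_token_id = tokenizer.convert_tokens_to_ids(TGT_LANG)

    outputs: List[str] = []
    with torch.no_grad():
        for batch in chunks(sentences, batch_size):
            inputs = tokenizer(batch, return_tensors="pt", padding=True,
                               truncation=True).to(device)
            generated = model.generate(**inputs, forced_bos_token_id=tgt_token_id,
                                       max_new_tokens=max_new_tokens)
            outputs.extend(tokenizer.batch_decode(generated, skip_special_tokens=True))
    return outputs
```

Calling `translate_to_twi(["Good morning."])` downloads the checkpoint on first use. Setting `forced_bos_token_id` to the Twi language token is what steers NLLB toward Twi rather than another of its 200 languages.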

About Meta (meta.com)

When Facebook launched in 2004, it changed the way people connect. Apps like Messenger, Instagram and WhatsApp further empowered billions around the world. Now, Meta is moving beyond 2D screens toward immersive experiences like augmented, virtual and mixed reality to help build the next evolution in social technology.

At Meta, we’re building innovative new ways to help people feel closer to each other, and the makeup of our company reflects the diverse perspectives of the people who use our technologies.

About NLPGhana (ghananlp.org)

NLPGhana (also known as GhanaNLP) is an open-source movement of like-minded volunteers whose time and skills are dedicated to building an ecosystem of:

- Open-source datasets

- Open-source computational methods

- An army of NLP researchers, scientists and practitioners

To revolutionize and improve every aspect of Ghanaian and African life through the powerful tool of Natural Language Processing.

The evaluation metric for this competition is the ROUGE score, specifically ROUGE-N with N = 1 (ROUGE-1), reporting the F-measure.

The Recall-Oriented Understudy for Gisting Evaluation (ROUGE) algorithm measures the similarity between a candidate text and one or more reference texts by counting overlapping n-grams; ROUGE-1 counts overlapping single words (unigrams). It is commonly used to evaluate machine translation and summarization models [ref].
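For local validation, a minimal sketch of the ROUGE-1 F-measure follows: clipped unigram overlap gives precision (over candidate tokens) and recall (over reference tokens), combined as their harmonic mean. It assumes lowercasing plus whitespace tokenization and a plain average over rows; the leaderboard's exact tokenizer and aggregation are not stated here, so local scores may differ. If you score with Google's `rouge-score` package instead, note that its default tokenizer keeps only the characters a-z and 0-9, so Twi letters such as ɛ and ɔ are treated as word boundaries.

```python
from collections import Counter
from typing import List, Sequence


def tokenize(text: str) -> List[str]:
    # Assumption: lowercase + whitespace split; keeps Twi letters such as ɛ and ɔ.
    return text.lower().split()


def rouge1_f(candidate: str, reference: str) -> float:
    """ROUGE-1 F-measure between one candidate and one reference."""
    cand = Counter(tokenize(candidate))
    ref = Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # unigram matches, clipped by min count
    if overlap == 0:
        return 0.0  # also covers an empty candidate or reference
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def mean_rouge1_f(candidates: Sequence[str], references: Sequence[str]) -> float:
    """Average per-row ROUGE-1 F (assumed aggregation)."""
    if len(candidates) != len(references) or not candidates:
        raise ValueError("need equally sized, non-empty lists")
    scores = [rouge1_f(c, r) for c, r in zip(candidates, references)]
    return sum(scores) / len(scores)
```

For example, the candidate "the cat on the mat" against the reference "the cat sat on the mat" matches 5 unigrams, so precision is 5/5, recall is 5/6 and F = 10/11.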

For every row in the test set, the submission file should contain 2 columns: ID and Target (the Twi translation).

Your submission file should look like this:

| ID | Target |
|---|---|
| ID_AAAAhgRX | Mpɛn pii no nso nneɛma... |
| ID_AAGuzGzi | Nnipa a wɔwɔ ... |
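A minimal sketch of writing that file with Python's standard `csv` module, assuming the `ID`/`Target` header from the sample above. The function names are hypothetical. Writing with UTF-8 keeps Twi letters such as ɛ and ɔ intact, and the `csv` writer quotes translations that contain commas.

```python
import csv
import io
from typing import Sequence


def submission_csv_text(ids: Sequence[str], translations: Sequence[str]) -> str:
    """Render the two-column ID/Target submission as CSV text."""
    if len(ids) != len(translations):
        raise ValueError("ids and translations must have the same length")
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["ID", "Target"])
    writer.writerows(zip(ids, translations))  # commas inside a translation get quoted
    return buffer.getvalue()


def write_submission(path: str, ids: Sequence[str], translations: Sequence[str]) -> None:
    """Write the submission file as UTF-8 so Twi characters survive."""
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write(submission_csv_text(ids, translations))
```

Checking that the number of rows matches the test set before uploading is cheap and avoids a failed processing step.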

To ensure a smooth experience, we’ve set up custom Google Compute Engine (GCE) VMs that you’ll use for your sessions on Google Colab.

Specs

- 8 vCPUs, 4 cores, 32 GB RAM

- 1 NVIDIA L4 GPU

- 200 GB SSD Disk

If you qualify, you will receive an email on 3 September at 16:00 with your unique instance and further instructions on how to connect.

Read through this "How To Guide" in preparation.

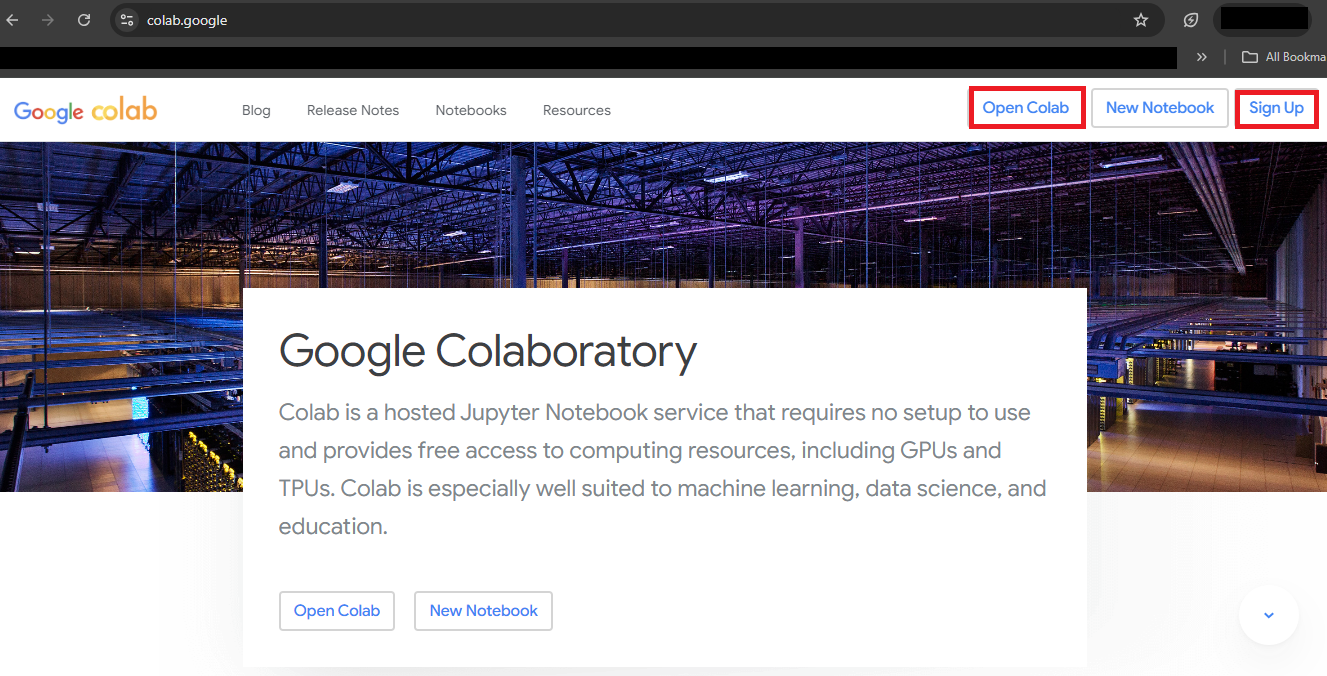

Step 1: Log in to Google Colab

Open your web browser and navigate to Google Colab. If you don’t have an account, click Sign Up and you’ll be directed to a sign-up page. If you already have an account, click Open Colab.

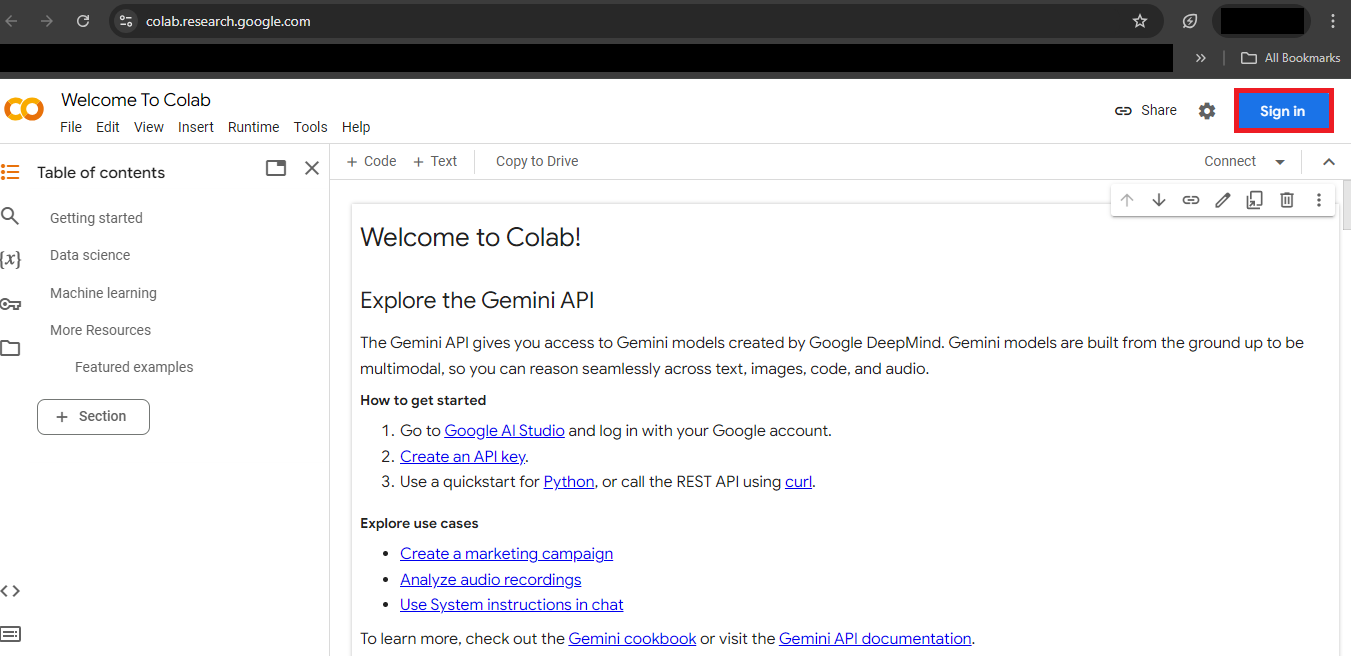

Click on Sign in in the top-right corner.

Use your personal Google email address to log in.

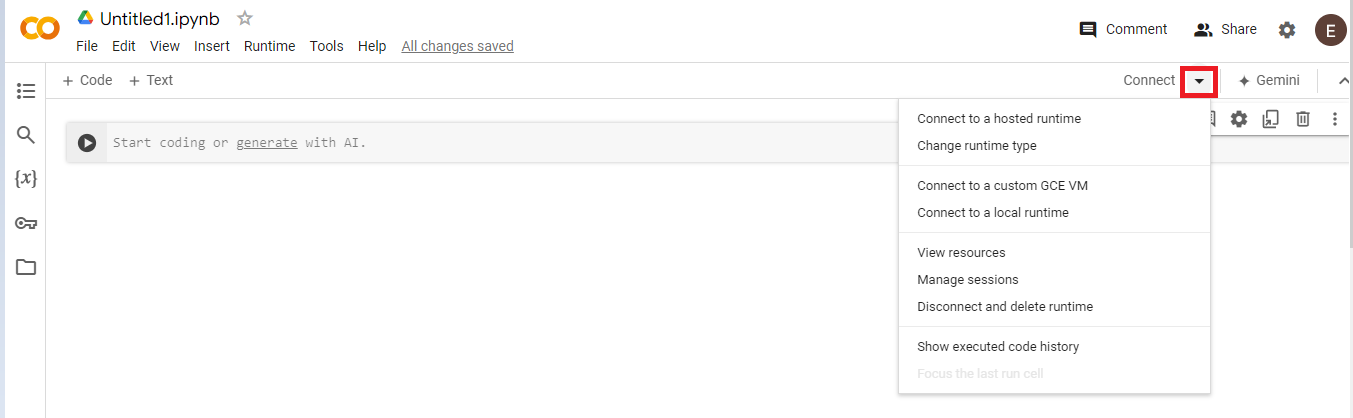

Step 2: Connect to Your Custom GCE VM

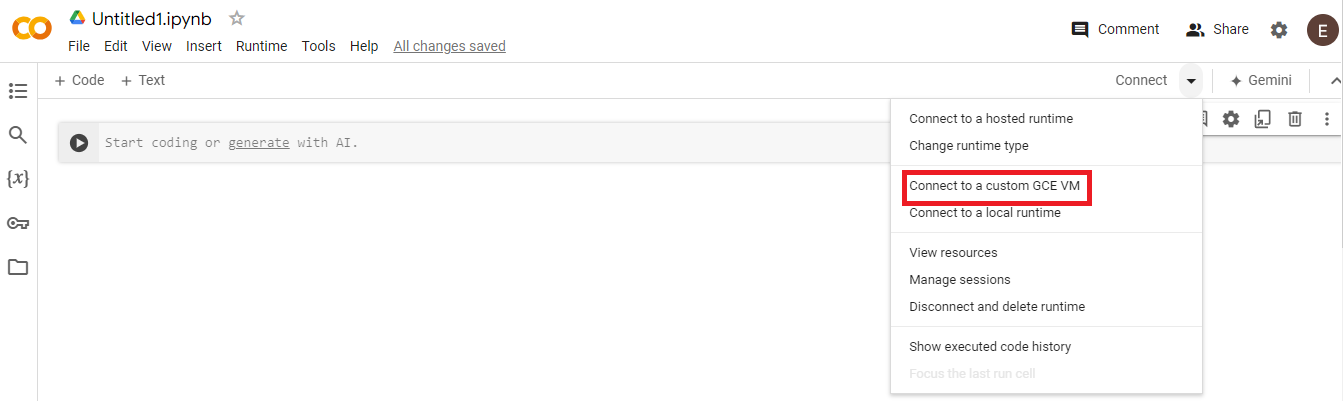

After logging into Google Colab, go to the top-right corner where the "Connect" button is located. Click on the dropdown arrow next to it, as shown below.

Click Connect to a custom GCE VM.

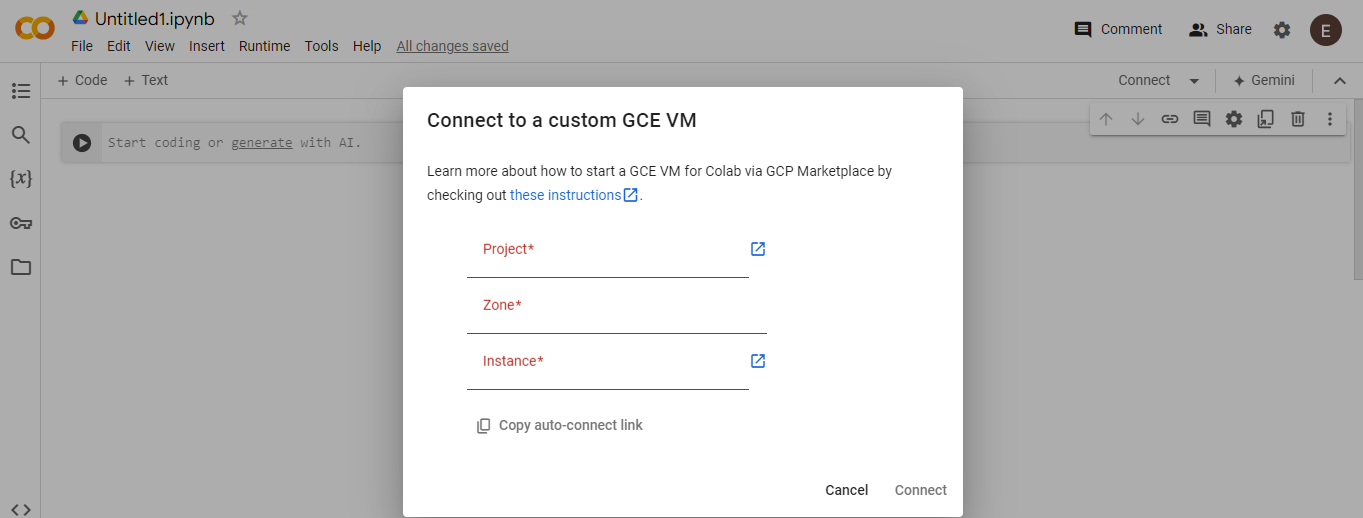

Use the credentials provided to connect to your assigned Google Compute Engine Virtual Machine by entering the details in the Project, Zone, and Instance fields.

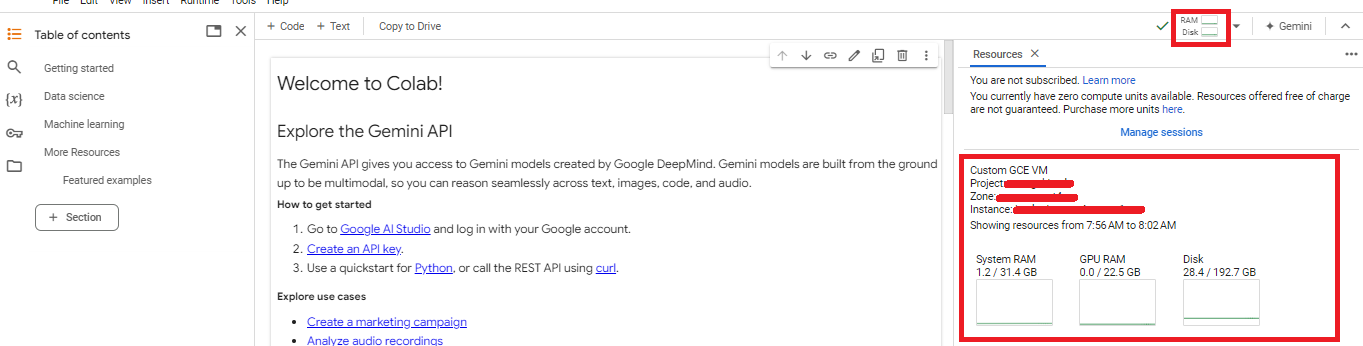

Once connected to the GCE VM, you'll see a RAM and DISK icon section with a green checkmark to its left. Click on this section to open a pop-up, as shown below, which will display the details of your GCE VM connection.

Happy Coding! 🤗

All VMs will be terminated on 6 September at 14:00.

Your solution must complete both training and inference within 8 hours on hardware no more powerful than the following:

- 8 vCPUs, 4 cores, 32 GB RAM

- 1 NVIDIA L4 GPU

- 200 GB SSD Disk
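Because the 8-hour limit covers the whole train-and-infer run, and the code review asks for the time taken to run the full solution, it helps to record wall-clock time per stage. A minimal sketch, assuming you wrap each stage of your own pipeline; `stage_timer` and `within_budget` are hypothetical helpers.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator

# 8-hour train + inference limit from the challenge rules, in seconds.
TIME_BUDGET_SECONDS = 8 * 60 * 60


@contextmanager
def stage_timer(name: str, log: Dict[str, float]) -> Iterator[None]:
    """Store the wall-clock duration of the wrapped block in log[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        log[name] = time.perf_counter() - start


def within_budget(log: Dict[str, float], budget: float = TIME_BUDGET_SECONDS) -> bool:
    """True if the summed stage durations fit inside the time budget."""
    return sum(log.values()) <= budget
```

In use, wrap each stage, for example `with stage_timer("train", timings):` around fine-tuning and `with stage_timer("infer", timings):` around prediction, then report `sum(timings.values())` in your README.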

1st winner: $2 500 USD

2nd winner: $1 500 USD

3rd winner: $1 000 USD

There are 500 Zindi points available. You can read more about Zindi points here.

Prizes will only be awarded to registered attendees of Deep Learning Indaba Senegal 2024.


If you are in the top 10 of the private leaderboard when the competition ends, you will receive an email at the close of the competition requesting you to submit your code for verification. Code needs to be submitted by 6 September 2024 at 18:00.

You will need to submit:

- Username or Team Name

- Public Leaderboard Rank

- Picture of your DLI name badge

- GPU used, if you used one

- Time taken to run full solution

- README file.

- CSV submission file. This must be the submission file that corresponds to your score on the leaderboard.

- Requirements file listing only the libraries used in your solution.

- Solution code. If it spans more than one file, upload it as a .ZIP archive.
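One way to produce a requirements file that lists only the libraries you actually used is to pin them explicitly from the running environment. A minimal sketch using the standard `importlib.metadata.version` lookup; the function name and the example package list are hypothetical, so substitute the distributions your solution imports.

```python
from importlib import metadata
from typing import Callable, Iterable


def requirements_text(packages: Iterable[str],
                      lookup: Callable[[str], str] = metadata.version) -> str:
    """Return pinned `name==version` lines for the given distribution names.

    A package that is not installed raises importlib.metadata.PackageNotFoundError,
    so a missing dependency is noticed instead of being silently dropped.
    """
    lines = [f"{name}=={lookup(name)}" for name in packages]
    return "\n".join(lines) + "\n"
```

For instance, `open("requirements.txt", "w").write(requirements_text(["torch", "transformers", "sentencepiece"]))` pins whichever versions are installed in the environment where you ran the solution.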

This challenge starts on 2 September at 17:00.

The first 100 teams created will qualify for a GPU.

You need to form a team by 3 September at 13:00.

If you qualify, you will receive an email with instructions on how to connect on 3 September at 16:00.

Competition closes on 6 September at 14:00.

The private leaderboard will be revealed on 7 September at 14:00.

We reserve the right to update the contest timeline if necessary.

How to enroll in your first Zindi competition

How to create a team on Zindi

How to update your profile on Zindi

How to use Colab on Zindi

How to mount a drive on Colab

- Languages and tools: You may only use open-source languages and tools in building models for this challenge.

- Who can compete: Indaba Senegal 2024 participants ONLY.

- Submission Limits: 50 submissions per day, 100 submissions overall.

- Team size: Maximum of 4 members per team.

- Public-Private Split: Zindi maintains a public leaderboard and a private leaderboard for each challenge. The Public Leaderboard includes approximately 30% of the test dataset. The private leaderboard will be revealed at the close of the challenge and contains the remaining 70% of the test set.

- Data Sharing: Apache License 2.0

- Platform abuse: Multiple accounts, or sharing of code and information across accounts not in teams, or any other forms of platform abuse are not allowed, and will lead to disqualification.

- Code Review: The top 5 on the private leaderboard will receive an email requesting their code at the close of the challenge. You will have 1 hour to submit your code.

ENTRY INTO THIS CHALLENGE CONSTITUTES YOUR ACCEPTANCE OF THESE OFFICIAL CHALLENGE RULES.

Full Challenge Rules

This challenge is open to Indaba Senegal 2024 participants ONLY.

Teams and collaboration

You may participate in challenges as an individual or in a team of up to four people. When creating a team, the team must have a total submission count less than or equal to the maximum allowable submissions as of the formation date. A team will be allowed the maximum number of submissions for the challenge, minus the total number of submissions among team members at team formation. Prizes are transferred only to the individual players or to the team leader.
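The team submission arithmetic can be sketched as follows, assuming it uses the overall cap of 100 submissions and the 4-member limit stated in the rules (the daily limit of 50 is ignored here). The function names are hypothetical; the same check applies to a merge by passing the combined members of both teams.

```python
from typing import Sequence

MAX_TEAM_SIZE = 4      # from the challenge rules
MAX_SUBMISSIONS = 100  # overall submission cap from the challenge rules


def can_form_team(member_submission_counts: Sequence[int],
                  max_team_size: int = MAX_TEAM_SIZE,
                  max_submissions: int = MAX_SUBMISSIONS) -> bool:
    """A team is allowed if it fits the size cap and members' combined submissions fit the cap."""
    return (len(member_submission_counts) <= max_team_size
            and sum(member_submission_counts) <= max_submissions)


def team_remaining_submissions(member_submission_counts: Sequence[int],
                               max_submissions: int = MAX_SUBMISSIONS) -> int:
    """Submissions left to a newly formed team: the cap minus members' prior submissions."""
    return max(0, max_submissions - sum(member_submission_counts))
```

For example, three members who have already made 10, 25 and 5 submissions can form a team that has 60 submissions left, while two members with 60 and 50 submissions cannot team up.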

Multiple accounts per user are not permitted, and neither is collaboration or membership across multiple teams. Individuals and their submissions originating from multiple accounts will be immediately disqualified from the platform.

Code must not be shared privately outside of a team. Any code that is shared must be made available to all challenge participants through the platform (i.e., on the discussion boards).

The Zindi data scientist who sets up a team is the default Team Leader but they can transfer leadership to another data scientist on the team. The Team Leader can invite other data scientists to their team. Invited data scientists can accept or reject invitations. Until a second data scientist accepts an invitation to join a team, the data scientist who initiated a team remains an individual on the leaderboard. No additional members may be added to teams within the final 5 days of the challenge or last hour of a hackathon.

The team leader can initiate a merge with another team. Only the team leader of the second team can accept the invite. The default team leader is the leader from the team who initiated the invite. Teams can only merge if the total number of members is less than or equal to the maximum team size of the challenge.

A team can be disbanded if it has not yet made a submission. Once a submission is made, individual members cannot leave the team.

All members in the team receive points associated with their ranking in the challenge and there is no split or division of the points between team members.

Datasets and packages

The solution must use publicly-available, open-source packages only.

You may use only the datasets provided for this challenge. Automated machine learning (AutoML) tools are not permitted.

You may use pretrained models as long as they are openly available to everyone.

The challenge data is made available to you under the Apache License 2.0.

You must notify Zindi immediately upon learning of any unauthorised transmission of or unauthorised access to the challenge data, and work with Zindi to rectify any unauthorised transmission or access.

Your solution must not infringe the rights of any third party and you must be legally entitled to assign ownership of all rights of copyright in and to the winning solution code to Zindi.

Submissions and winning

You may make a maximum of 50 submissions per day.

You may make a maximum of 100 submissions for this challenge.

Before the end of the challenge you need to choose 2 submissions to be judged on for the private leaderboard. If you do not make a selection your 2 best public leaderboard submissions will be used to score on the private leaderboard.

During the challenge, your best public score will be displayed regardless of the submissions you have selected. When the challenge closes your best private score out of the 2 selected submissions will be displayed.
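The selection rule above can be sketched in a few lines, assuming higher ROUGE-1 F is better and that ties in public score keep their original order (how Zindi breaks such ties is not stated). The `Submission` type and function names are hypothetical, and the scores in the example are made-up toy values.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Submission:
    sub_id: str
    public_score: float
    private_score: float


def judged_submissions(subs: Sequence[Submission],
                       selected_ids: Optional[Sequence[str]] = None) -> List[Submission]:
    """Return the (at most 2) submissions used for the private leaderboard."""
    if selected_ids:
        if len(selected_ids) > 2:
            raise ValueError("at most 2 submissions can be selected")
        wanted = set(selected_ids)
        return [s for s in subs if s.sub_id in wanted]
    # No selection: fall back to the 2 best public scores (higher is better).
    return sorted(subs, key=lambda s: s.public_score, reverse=True)[:2]


def final_private_score(subs: Sequence[Submission],
                        selected_ids: Optional[Sequence[str]] = None) -> float:
    """Best private score among the judged submissions."""
    return max(s.private_score for s in judged_submissions(subs, selected_ids))
```

With toy submissions a (public 0.30, private 0.28), b (0.35, 0.31) and c (0.33, 0.34), making no selection judges b and c and yields 0.34, whereas explicitly selecting a and b yields 0.31.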

Zindi maintains a public leaderboard and a private leaderboard for each challenge. The Public Leaderboard includes approximately 30% of the test dataset. While the challenge is open, the Public Leaderboard will rank the submitted solutions by their score on the evaluation metric (ROUGE-1 F-measure). Upon close of the challenge, the Private Leaderboard, which covers the other 70% of the test dataset, will be made public and will constitute the final ranking for the challenge.

Note that to count, your submission must first pass processing. If your submission fails during the processing step, it will not be counted and not receive a score; nor will it count against your daily submission limit. If you encounter problems with your submission file, your best course of action is to ask for advice on the Competition’s discussion forum.

If you are in the top 5 at the time the leaderboard closes, we will email you to request your code. On receipt of email, you will have 1 hour to respond and submit your code following the Reproducibility of submitted code guidelines detailed below. Failure to respond will result in disqualification.

If your solution places 1st, 2nd, or 3rd on the final leaderboard, you will be required to submit your winning solution code to us for verification.

If two solutions earn identical scores on the leaderboard, the tiebreaker will be the date and time at which each submission was made (the earlier submission wins).
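The tiebreak amounts to sorting by score in descending order, then by submission time in ascending order. A minimal sketch, assuming higher ROUGE-1 F is better and scores compare as exact values; the `Entry` type and `rank` function are hypothetical.

```python
from datetime import datetime
from typing import List, NamedTuple, Sequence


class Entry(NamedTuple):
    team: str
    score: float
    submitted_at: datetime


def rank(entries: Sequence[Entry]) -> List[Entry]:
    """Order by highest score first; equal scores go to the earlier submission."""
    return sorted(entries, key=lambda e: (-e.score, e.submitted_at))
```

Two teams tied at the same score are therefore ordered by whichever submitted first, and both sit below any team with a strictly higher score.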

The winners will be paid via bank transfer, PayPal (if payment is less than or equal to $100), or another international money transfer platform. International transfer fees will be deducted from the total prize amount, unless the prize money is under $500, in which case the international transfer fees will be covered by Zindi. In all cases, the winners are responsible for any other fees applied by their own bank or other institution for receiving the prize money. All taxes imposed on prizes are the sole responsibility of the winners. The top winners or team leaders will be required to present Zindi with proof of identification, proof of residence, and a letter from their bank confirming their banking details. Winners will be paid in USD or the currency of the challenge. If your account cannot receive US Dollars or the currency of the challenge, your bank will need to provide proof of this and Zindi will try to accommodate this.

Please note that due to the ongoing Russia-Ukraine conflict, we are not currently able to make prize payments to winners located in Russia. We apologise for any inconvenience this may cause and will handle any issues that arise on a case-by-case basis.

Payment will be made after code review and sealing the leaderboard.

You acknowledge and agree that Zindi may, without any obligation to do so, remove or disqualify an individual, team, or account if Zindi believes that such individual, team, or account is in violation of these rules. Entry into this challenge constitutes your acceptance of these official challenge rules.

Zindi is committed to providing solutions of value to our clients and partners. To this end, we reserve the right to disqualify your submission on the grounds of usability or value. This includes but is not limited to the use of data leaks or any other practices that we deem to compromise the inherent value of your solution.

Zindi also reserves the right to disqualify you and/or your submissions from any challenge if we believe that you violated the rules or violated the spirit of the challenge or the platform in any other way. The disqualifications are irrespective of your position on the leaderboard and completely at the discretion of Zindi.

Please refer to the FAQs and Terms of Use for additional rules that may apply to this challenge. We reserve the right to update these rules at any time.

Reproducibility of submitted code

- If your submitted code does not reproduce your score on the leaderboard, we reserve the right to adjust your rank to the score generated by the code you submitted.

- If your code does not run, you will be dropped from the top 10. Please make sure your code runs before submitting your solution.

- Always set the seed. Rerunning your model should always place you at the same position on the leaderboard. When running your solution, if randomness shifts you down the leaderboard we reserve the right to adjust your rank to the closest score that your submission reproduces.

- Custom packages in your submission notebook will not be accepted.

- You may only use tools available to everyone, i.e., no paid services and no free trials that require a credit card.
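For the "always set the seed" rule, a minimal sketch that seeds the random number generators a typical PyTorch/`transformers` pipeline draws from, assuming NumPy and PyTorch may or may not be installed. The `transformers` library also provides a `set_seed` helper covering similar ground. Even with these settings, some GPU operations are not guaranteed to be bit-for-bit deterministic, so rerun the full solution to confirm it reproduces your leaderboard score.

```python
import random


def set_seed(seed: int = 42) -> None:
    """Seed Python, NumPy and PyTorch RNGs (the latter two only if installed)."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy not installed; nothing to seed
    try:
        import torch
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # safe to call when no GPU is present
        # Trade some speed for repeatable cuDNN kernel selection.
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass  # PyTorch not installed
```

Call `set_seed` once at the top of each notebook or script, before any data shuffling, model initialisation or training.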

Documentation

A README markdown file is required. It should cover:

- How to set up folders and where each file is saved

- Order in which to run code

- Explanations of features used

- Environment for the code to be run (conda environment.yml file or an environment.txt file)

- Hardware needed (e.g. Google Colab or the specifications of your local machine)

- Expected run time for each notebook. This will be useful to the review team for time and resource allocation.

Your code needs to run properly; code reviewers do not have time to debug code. If your code does not run easily, you will be bumped down the leaderboard.

Consequences of breaking any rules of the challenge or submission guidelines:

- First offence: No prizes for 6 months and 2000 points will be removed from your profile (probation period). If you are caught cheating, all individuals involved in cheating will be disqualified from the challenge(s) you were caught in, you will be disqualified from winning any challenges for the next six months, and 2000 points will be removed from your profile. If you have fewer than 2000 points on your profile, your points will be set to 0.

- Second offence: Banned from the platform. If you are caught for a second time your Zindi account will be disabled and you will be disqualified from winning any challenges or Zindi points using any other account.

- Teams with individuals who are caught cheating will not be eligible to win prizes or points in the challenge in which the cheating occurred, regardless of the individuals’ knowledge of or participation in the offence.

- Teams with individuals who have previously committed an offence will not be eligible for any prizes for any challenges during the 6-month probation period.

Monitoring of submissions

- We will review the top 10 solutions of every challenge when the challenge ends.

- We reserve the right to request code from any user at any time during a challenge. You will have 24 hours to submit your code following the rules for code review (see above). Zindi reserves the right not to explain our reasons for requesting code. If you do not submit your code within 24 hours you will be disqualified from winning any challenges or Zindi points for the next six months. If you fall under suspicion again and your code is requested and you fail to submit your code within 24 hours, your Zindi account will be disabled and you will be disqualified from winning any challenges or Zindi points with any other account.
