UmojaHack Africa 2022: African Snake Antivenom Binding Challenge (ADVANCED)

To prepare for UmojaHack:

- Sign up for the event: bit.ly/uha22-signup

- Join our discord channel

- Enroll in your first competition

- Update your profile to show your university or school

- Create a team

- Practice on the Beginner Practice Challenge

- Read this article on how to make your first submission

Snake bites cause more than 100 000 deaths and more than 300 000 permanent disfigurements every year, and this damage is caused by toxin proteins in snake venom. Injury and death from snake bites are more common in poor socioeconomic conditions, and the problem has historically received limited funding for the discovery, development, and delivery of new treatment options.

Currently, the only effective treatment against snakebite envenoming consists of unspecified cocktails of polyclonal antibodies extracted from immunised production animals. These antivenom antibodies work unreliably across different snake species, and until recently little data existed on which of the 2000+ snake venom toxins they bind, or on where on the toxin the antibody binds.

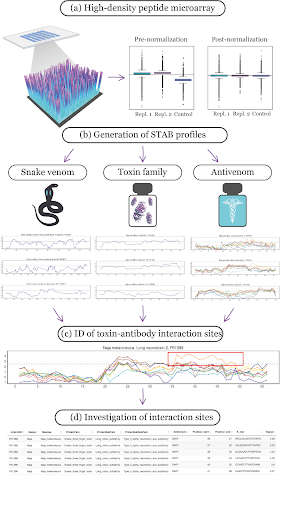

To address this issue, a recent study used high-density peptide microarray (HDPM) technology with all currently available African snake toxin protein sequences, together with eight commercial antivenoms in clinical use in Africa to better understand how antivenoms bind to specific snake venom toxins.

In this challenge, you are tasked with building a machine learning model that predicts how strongly a given string of amino acids from a snake venom toxin protein binds to eight different commercial antivenom antibodies.

Your solutions will help predict which other toxins a given antivenom could be effective against (i.e. neutralise), rapidly improving patient treatment. In the long term, this work could lead to a whole new generation of snakebite treatments based on specific engineered antibodies; understanding where antibodies might bind a given toxin will ultimately contribute to this goal.

Watch this video for a walk-through of the starter notebook and for interesting insights.

About InstaDeep (www.instadeep.com)

InstaDeep Ltd is an EMEA leader in decision-making AI products for the Enterprise, with headquarters in London, and offices in Paris, Tunis, Lagos, Dubai and Cape Town. With expertise in both machine intelligence research and practical business deployments, the Company provides a competitive advantage to its partners in an AI-first world. Leveraging its extensive know-how in GPU-accelerated computing, deep learning and reinforcement learning, InstaDeep has built products and solutions that tackle the most complex challenges across a range of industries. The firm’s hands-on approach to research, combined with a broad spectrum of clients, ensures an exciting and rewarding environment to work and thrive in. InstaDeep has also developed collaborations with global leaders in the artificial intelligence ecosystem, such as Google DeepMind, Nvidia and Intel.

A library of unique 16-mer peptides was designed in silico for microarray synthesis. The library comprised peptides derived from the toxin protein sequences of 40 snake species, covering 481 proteins from 23 protein families. Protein FASTA files were downloaded from the UniProt database, the sequences were trimmed to remove signal peptides, and all unknown amino acid residues (X) were substituted by comparison with homologous protein sequences also included in the study. The 16-mer peptides were then constructed by splitting every toxin into k-mers of 16 amino acids, tiling at every amino acid position (i.e. with a step of one residue, so consecutive peptides overlap by 15 residues).
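The tiling step can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: it assumes a plain string of one-letter amino acid codes with the signal peptide already removed, and the function name `tile_peptides` and the 20-residue example sequence are hypothetical.

```python
from typing import List


def tile_peptides(sequence: str, k: int = 16) -> List[str]:
    """Split a protein sequence into overlapping k-mers, one starting at every position."""
    if k <= 0:
        raise ValueError("k must be positive")
    # A sequence of length n yields n - k + 1 overlapping k-mers (none if n < k).
    return [sequence[i:i + k] for i in range(len(sequence) - k + 1)]


# Hypothetical 20-residue sequence, used only for illustration.
example = "MKTLLLTLVVVTIVCLDLGY"
peptides = tile_peptides(example)
print(len(peptides))   # 5
print(peptides[0])     # MKTLLLTLVVVTIVCL
print(peptides[-1])    # LLTLVVVTIVCLDLGY
```

Because the window advances one residue at a time, a toxin of length n contributes n - 15 peptides, which is why neighbouring rows in the data share most of their sequence.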

Thereafter, the binding signal of each antivenom was measured by adding a fluorescently labelled detection antibody alongside the antivenom. This revealed which and how many toxin epitopes an antivenom bound, and also how strongly (signal intensity). The signals were normalised and are reported as Z-scores; toxin peptides with a normalised signal score higher than 2.5 (corresponding to a p-value of at most 0.05) were considered significant. Further information can be found in the publication (https://journals.plos.org/plosntds/article?id=10.1371/journal.pntd.0008366).
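To make the Z-score and the 2.5 threshold concrete, here is a minimal sketch. It assumes a simple standardisation (subtract the mean, divide by the population standard deviation) over a set of raw intensities; the study's actual normalisation procedure may differ, so see the linked publication. The function names and the raw intensities are hypothetical.

```python
import statistics
from typing import List


def z_scores(signals: List[float]) -> List[float]:
    """Standardise raw intensities as (x - mean) / standard deviation (assumed scheme)."""
    mean = statistics.fmean(signals)
    sd = statistics.pstdev(signals)  # population standard deviation
    if sd == 0:
        raise ValueError("signals have zero variance, cannot standardise")
    return [(x - mean) / sd for x in signals]


def flag_significant(z: List[float], threshold: float = 2.5) -> List[bool]:
    """Mark peptides whose normalised score is above the significance threshold."""
    return [score > threshold for score in z]


# Hypothetical raw intensities: nine background peptides and one strong binder.
raw = [100.0] * 9 + [1000.0]
z = z_scores(raw)
print(round(z[-1], 3))        # 3.0
print(flag_significant(z))    # only the last peptide is flagged
```

The target you predict is already on this normalised scale, so a predicted Signal above 2.5 roughly corresponds to a peptide the study would have called a significant binder.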

The error metric for this competition is the Root Mean Squared Error.
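Root Mean Squared Error is the square root of the average squared difference between predicted and true signals, so lower is better and large misses are penalised more than small ones. A minimal stdlib sketch for checking a validation split locally (the function name and the numbers are illustrative):

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root Mean Squared Error: sqrt of the mean of squared differences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Illustrative values: two exact predictions and one miss by 2.0.
print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # ≈ 1.1547 (sqrt(4/3))
```

Since the target is a Z-score, the RMSE is expressed in the same standardised units.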

For every row in the test set, the submission file should contain two columns: ID and Signal.

Your submission file should look like this:

| ID | Signal |
|---|---|
| A8YPR6_Bioclone_14 | -0.7 |
| A8YPR6_Bioclone_15 | 0.72 |
| A8YPR6_Bioclone_16 | -0.43 |
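A minimal sketch of producing this file with Python's standard `csv` module, assuming the usual comma-separated format for the submission file. The helper name `write_submission` is hypothetical, and the predictions below are simply the example values; in practice they come from your model, one per test ID.

```python
import csv
import io
from typing import Dict, TextIO


def write_submission(predictions: Dict[str, float], handle: TextIO) -> None:
    """Write a header plus one row per test ID with the two required columns."""
    writer = csv.writer(handle)
    writer.writerow(["ID", "Signal"])
    for row_id, signal in predictions.items():
        writer.writerow([row_id, signal])


# Example values copied from the sample submission.
preds = {
    "A8YPR6_Bioclone_14": -0.7,
    "A8YPR6_Bioclone_15": 0.72,
    "A8YPR6_Bioclone_16": -0.43,
}
buffer = io.StringIO()
write_submission(preds, buffer)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with `open("submission.csv", "w", newline="")`; the `newline=""` argument stops the `csv` module from producing blank lines on Windows.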

1st prize: $1200

2nd prize: $1000

3rd prize: $800

PLEASE NOTE that Zindi will only award ONE PRIZE PER UNIVERSITY; in other words, if more than one individual or team from a specific university would be eligible to win a prize, we will award a prize to the higher-ranked individual or team only.

Country prize: $100 (given to the highest-ranking user/team from a specific country that didn’t win any other prize). Country winners will be determined by competition ranking relative to the number of users on the leaderboard, adjusted by a factor: your percentage placement will be multiplied by 3 (advanced challenge), 2.4 (intermediate challenge) or 1.8 (beginner challenge). The user with the highest adjusted score will be the country winner.
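The adjustment can be sketched as below. The rules do not define "percentage placement" precisely, so this sketch assumes it is `100 * (leaderboard_size - rank + 1) / leaderboard_size`, which gives 100 for first place and makes higher better, consistent with "highest adjusted score wins". Only the three multipliers come from the rules; the function names and entrants are hypothetical, and the "no other prize" exclusion is not modelled.

```python
from typing import Dict, List, Tuple

# Multipliers stated in the rules for each challenge level.
LEVEL_FACTOR: Dict[str, float] = {"advanced": 3.0, "intermediate": 2.4, "beginner": 1.8}


def adjusted_score(rank: int, leaderboard_size: int, level: str) -> float:
    """Percentage placement (assumed: 100 for first place) times the challenge-level factor."""
    if not 1 <= rank <= leaderboard_size:
        raise ValueError("rank must be between 1 and leaderboard_size")
    placement_pct = 100.0 * (leaderboard_size - rank + 1) / leaderboard_size
    return placement_pct * LEVEL_FACTOR[level]


def country_winner(entries: List[Tuple[str, int, int, str]]) -> str:
    """Pick the entry (name, rank, leaderboard_size, level) with the highest adjusted score."""
    return max(entries, key=lambda e: adjusted_score(e[1], e[2], e[3]))[0]


# Hypothetical entrants from one country.
entries = [
    ("team_a", 10, 200, "advanced"),      # 95.5% placement * 3.0 = 286.5
    ("team_b", 2, 400, "intermediate"),   # 99.75% placement * 2.4 ≈ 239.4
]
print(country_winner(entries))  # team_a
```

Under this reading, the factor lets a strong placement in the harder challenge beat a near-perfect placement in an easier one.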

All times are GMT

Saturday 19 March

- Join the session here: https://us02web.zoom.us/j/85966888212?pwd=UzlGSFBrZ1ZRd3pWUEJqTHo3SVZ6UT09

- Session password: Uhack22

08:00 - 09:15 Welcome to the event, sponsors’ address, orientation and introduction to the challenges on Zoom.

09:15 Competition launch

Key times to note:

- 12:15 - 12:25 - Lunch, check-in and live chats on Discord/Zoom

- 12:25 - 12:35 - Introduction to Google Colab by Chris Perry, Product Manager at Colab

- 12:35 - 12:55 - Technical conversations about the challenge

- 17:10 - 17:15 - Check in from Tanzania with Tony Mipawa

- 17:15 - 17:25 - Sponsor address by Dmitry Gordeev from H2O.ai

- 17:30 - UmojaHack Africa 2022 stream sign off

Hack all day and all night! Questions and issues during this time can be addressed via the Discord channel.

Sunday 20 March

- Join the session here: https://us02web.zoom.us/j/88299327429?pwd=VWl5RHRCZis0NjNOM2o1NjNGczErZz09

- Session password: Uhack22

08:00 - 08:15 Day 2 morning check-in and schedule for the day

17:00 Submissions to Zindi close

17:00 - 17:30 Announcement of winners and prizes; the private leaderboard will be revealed at 17:30.

Key times to note:

- 08:10 - 08:15 - Introduction to the day by Sam Masikini

- 08:15 - 08:25 - Sponsor address by Moustapha Cisse from Google AI

- 08:25 - 08:35 - Sponsor address by Karim Beguir from InstaDeep

- 08:35 - 08:40 - Check in from Nigeria with Tejumola Asanloko

- 12:00 - 12:10 - Sponsor address by Shaun Dipnall from Explore Data Science Academy

- 12:10 - 12:15 - Check in from Sudan with Lina Yasin

African Snake Antivenom Binding Challenge (ADVANCED)

Monthly Insurance Claim Prediction Challenge (INTERMEDIATE)

Faulty Air Quality Sensor Challenge (BEGINNER)

Country Winners

- Algeria: TheSun00000

- Botswana: Team 00000

- Benin: CapitainData

- Burundi: Abel_Swift

- Cameroon: Niyo_D_JC

- Chad: Prag

- Egypt: AiadAsaad

- Eswatini: DSamo

- Ethiopia: Team Bomb Squad

- Ghana: 100i

- Guinea: Abdoulayegk

- Côte d'Ivoire: Team HACK-DATA

- Madagascar: heritianadanielina

- Malawi: Team Unima Tyrannosaurid

- Morocco: AzzimTaha

- Mozambique: Team MozTeam

- Namibia: CENNA

- Niger: maman_hach

- Congo: Team UnikinHack

- Rwanda: xcv

- Senegal: FutureApp

- Sudan: Team AoT

- Tanzania: AI_Avenger

- Togo: Abibi

- Uganda: Team zima

- Zambia: prudykav

- Zimbabwe: Team Driven by Data

Teams and collaboration

You may participate in competitions as an individual or in a team of up to four people. When creating a team, the team must have a total submission count less than or equal to the maximum allowable submissions as of the formation date. A team will be allowed the maximum number of submissions for the competition, minus the total number of submissions among team members at team formation. Prizes are transferred only to the individual players or to the team leader.
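The submission allowance for a new team is simple arithmetic, sketched below under the caps stated on this page (150 submissions per competition, teams of up to four). The function name and member counts are hypothetical, and the separate daily limit of 75 is not modelled.

```python
from typing import List

MAX_COMPETITION_SUBMISSIONS = 150  # competition-wide cap from "Submissions and winning"
MAX_TEAM_SIZE = 4


def team_submissions_remaining(member_submission_counts: List[int],
                               max_submissions: int = MAX_COMPETITION_SUBMISSIONS) -> int:
    """Submissions a newly formed team may still make: the cap minus members' combined total."""
    if not 1 <= len(member_submission_counts) <= MAX_TEAM_SIZE:
        raise ValueError("a team has between 1 and 4 members")
    used = sum(member_submission_counts)
    if used > max_submissions:
        # The combined count must not exceed the cap on the formation date.
        raise ValueError("team cannot be formed: combined submissions exceed the maximum")
    return max_submissions - used


# Hypothetical team: three members who have already made 20, 35 and 10 submissions.
print(team_submissions_remaining([20, 35, 10]))  # 85
```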

Multiple accounts per user are not permitted, and neither is collaboration or membership across multiple teams. Individuals and their submissions originating from multiple accounts will be immediately disqualified from the platform.

Code must not be shared privately outside of a team. Any code that is shared must be made available to all competition participants through the platform (i.e. on the discussion boards).

The Zindi data scientist who sets up a team is the default Team Leader but they can transfer leadership to another data scientist on the team. The Team Leader can invite other data scientists to their team. Invited data scientists can accept or reject invitations. Until a second data scientist accepts an invitation to join a team, the data scientist who initiated a team remains an individual on the leaderboard. No additional members may be added to teams within the last hour of a hackathon.

The team leader can initiate a merge with another team. Only the team leader of the second team can accept the invite. The default team leader is the leader from the team who initiated the invite. Teams can only merge if the total number of members is less than or equal to the maximum team size of the competition.

A team can be disbanded if it has not yet made a submission. Once a submission is made, individual members cannot leave the team.

All members in the team receive points associated with their ranking in the competition and there is no split or division of the points between team members.

Datasets and packages

The solution must use publicly-available, open-source packages only.

You may use only the datasets provided for this competition. Automated machine learning (AutoML) tools are not permitted.

You may use pretrained models as long as they are openly available to everyone.

The data used in this competition is the sole property of Zindi and the competition host. You may not transmit, duplicate, publish, redistribute or otherwise provide or make available any competition data to any party not participating in the Competition (this includes uploading the data to any public site such as Kaggle or GitHub). You may upload, store and work with the data on any cloud platform such as Google Colab, AWS or similar, as long as 1) the data remains private and 2) doing so does not contravene Zindi’s rules of use.

You must notify Zindi immediately upon learning of any unauthorised transmission of or unauthorised access to the competition data, and work with Zindi to rectify any unauthorised transmission or access.

Your solution must not infringe the rights of any third party and you must be legally entitled to assign ownership of all rights of copyright in and to the winning solution code to Zindi.

Submissions and winning

You may make a maximum of 75 submissions per day.

You may make a maximum of 150 submissions for this competition.

Before the end of the competition you need to choose 2 submissions to be judged on for the private leaderboard. If you do not make a selection your 2 best public leaderboard submissions will be used to score on the private leaderboard.

During the competition, your best public score will be displayed regardless of the submissions you have selected. When the competition closes your best private score out of the 2 selected submissions will be displayed.
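The selection rule can be sketched as follows. Because this competition is scored with RMSE, "best" means lowest. The `Submission` class, the helper name, and all scores are hypothetical; private scores are of course hidden from you until the competition closes.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Submission:
    name: str
    public_rmse: float
    private_rmse: float  # only revealed when the competition closes


def final_private_score(submissions: Sequence[Submission],
                        selected: Optional[Sequence[Submission]] = None) -> float:
    """Best (lowest) private RMSE among the judged submissions.

    With no selection, the 2 best public-leaderboard submissions are used instead.
    """
    if not selected:
        selected = sorted(submissions, key=lambda s: s.public_rmse)[:2]
    if len(selected) > 2:
        raise ValueError("at most 2 submissions can be selected")
    return min(s.private_rmse for s in selected)


subs = [
    Submission("a", public_rmse=0.90, private_rmse=0.95),
    Submission("b", public_rmse=0.88, private_rmse=0.97),
    Submission("c", public_rmse=0.92, private_rmse=0.91),
]
print(final_private_score(subs))                      # 0.95 (falls back to b and a)
print(final_private_score(subs, [subs[0], subs[2]]))  # 0.91
```

The example shows why choosing deliberately matters: the submission with the best public score is not necessarily the best on the hidden 60%.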

Zindi maintains a public leaderboard and a private leaderboard for each competition. The Public Leaderboard includes approximately 40% of the test dataset. While the competition is open, the Public Leaderboard will rank the submitted solutions by the score they achieve on the competition's error metric. Upon close of the competition, the Private Leaderboard, which covers the other 60% of the test dataset, will be made public and will constitute the final ranking for the competition.

Note that to count, your submission must first pass processing. If your submission fails during the processing step, it will not be counted and not receive a score; nor will it count against your daily submission limit. If you encounter problems with your submission file, your best course of action is to ask for advice on the Competition’s discussion forum.

If you are in the top 5 at the time the leaderboard closes, we will email you to request your code. On receipt of email, you will have 2 hours to respond and submit your code following the submission guidelines detailed below. Failure to respond will result in disqualification.

If your solution is one of the prize-winning solutions, you will be required to submit your winning solution code to us for verification, and you thereby agree to assign all worldwide rights of copyright in and to such winning solution to Zindi.

If two solutions earn identical scores on the leaderboard, the tiebreaker will be the date and time in which the submission was made (the earlier solution will win).
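The tie-breaker amounts to sorting on two keys: the score first (lower RMSE is better here), then the submission time (earlier wins). A minimal sketch with hypothetical teams and timestamps:

```python
from datetime import datetime
from typing import List, Tuple

Entry = Tuple[str, float, datetime]  # (team, rmse, submission time)


def rank_leaderboard(entries: List[Entry]) -> List[str]:
    """Order by RMSE (lower is better); identical scores go to the earlier submission."""
    return [team for team, _, _ in sorted(entries, key=lambda e: (e[1], e[2]))]


entries = [
    ("team_x", 0.91, datetime(2022, 3, 20, 15, 0)),
    ("team_y", 0.91, datetime(2022, 3, 20, 12, 30)),
    ("team_z", 0.89, datetime(2022, 3, 20, 16, 45)),
]
print(rank_leaderboard(entries))  # ['team_z', 'team_y', 'team_x']
```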

If the error metric requires probabilities to be submitted, do not set thresholds (or round your probabilities) to improve your place on the leaderboard. In order to ensure that the client receives the best solution, Zindi will need the raw probabilities. This will allow the clients to set thresholds according to their own needs.

The winners will be paid via bank transfer, PayPal, or other international money transfer platform. International transfer fees will be deducted from the total prize amount, unless the prize money is under $500, in which case the international transfer fees will be covered by Zindi. In all cases, the winners are responsible for any other fees applied by their own bank or other institution for receiving the prize money. All taxes imposed on prizes are the sole responsibility of the winners. The top 3 winners or team leaders will be required to present Zindi with proof of identification, proof of residence and a letter from their bank confirming their banking details. Winners will be paid in USD or the currency of the competition. If your account cannot receive US Dollars or the currency of the competition, then your bank will need to provide proof of this and Zindi will try to accommodate this.

Payment will be made after code review and an introductory call with the host.

You acknowledge and agree that Zindi may, without any obligation to do so, remove or disqualify an individual, team, or account if Zindi believes that such individual, team, or account is in violation of these rules. Entry into this competition constitutes your acceptance of these official competition rules.

Zindi is committed to providing solutions of value to our clients and partners. To this end, we reserve the right to disqualify your submission on the grounds of usability or value. This includes but is not limited to the use of data leaks or any other practices that we deem to compromise the inherent value of your solution.

Zindi also reserves the right to disqualify you and/or your submissions from any competition if we believe that you violated the rules or violated the spirit of the competition or the platform in any other way. The disqualifications are irrespective of your position on the leaderboard and completely at the discretion of Zindi.

Please refer to the FAQs and Terms of Use for additional rules that may apply to this competition. We reserve the right to update these rules at any time.

UmojaHack rules

- Teams may win in only one challenge category, and are encouraged to enter only one.

- Zindi will only award one prize per university, academy or educational institution; in other words, if more than one individual or team from a specific organisation would be eligible to win a prize, we will award a prize to the higher-ranked individual or team only.

- Participants may compete individually or in teams of up to four people. Members of teams need to come from the same university, academy or educational institution.

- You may not win if you have been employed by, interned with, or otherwise associated with the host(s) or sponsor(s) of this challenge.

- All participants in the hackathon must be registered students (undergraduate or graduate) at the university, academy or educational institution they represent. Lecturers, university staff, and alumni may participate in a mentorship or advisory capacity only.

- Teams and individuals may not collaborate or share information with one another.

- All solutions must use machine learning, but teams are permitted and encouraged to use exploratory data analysis in building their solutions.

- All solutions must use publicly-available, open-source packages and languages only.

- Solutions must use only the allowed and available datasets.

- Participants caught cheating or breaking any competition rules will be immediately disqualified from the competition.

- Universities caught cheating or allowing teams or participants to cheat will be immediately disqualified from the competition.

- The winning code must be submitted to Zindi for review and validation immediately at the close of the competition. In the interest of logistics, code review will take place only after the competition has closed and winners have been announced.

Important Note: By entering this hackathon you acknowledge that Zindi reserves the right to disqualify winning teams before, during, and after the competition. Code review will take place after the winners have been announced but before prize money has been paid; if review of the winning code shows that the winning team broke any competition rule, Zindi may disqualify the team and their university. This disqualification and the reasons for it will be made public, and the disqualified team will not be eligible to receive the prizes, regardless of any earlier prize announcements. Zindi reserves the right to revoke prizes at any stage if there is evidence of teams or universities breaking any competition rules or otherwise behaving in a way that brings Zindi into disrepute. In this case, the prizes will be awarded to the next highest-ranked eligible team and university. All decisions made by Zindi are final.

Reproducibility of submitted code

- If your submitted code does not reproduce your score on the leaderboard, we reserve the right to adjust your rank to the score generated by the code you submitted.

- If your code does not run, you will be dropped from the top 10. Please make sure your code runs before submitting your solution.

- Always set the seed. Rerunning your model should always place you at the same position on the leaderboard. When running your solution, if randomness shifts you down the leaderboard, we reserve the right to adjust your rank to the closest score that your submission reproduces.

- We expect full documentation. This includes:

  - All data used

  - Output data and where they are stored

  - Explanation of features used

  - A requirements file with all packages and versions used

  - Your solution must include the original data provided by Zindi and validated external data (if allowed)

  - All editing of data must be done in a notebook (i.e. not manually in Excel)

  - The environment in which the code should be run (e.g. Google Colab or the specifications of your local machine)

  - Expected run time for each notebook. This will be useful to the review team for time and resource allocation.
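For the "always set the seed" requirement, a minimal sketch of seeding Python's built-in generator and, if it is installed, NumPy's legacy global generator. The helper name `set_seed` and the value 42 are illustrative choices, not a Zindi requirement.

```python
import random

try:
    import numpy as np
except ImportError:  # NumPy is optional in this sketch
    np = None


def set_seed(seed: int = 42) -> None:
    """Seed Python's built-in RNG and, if available, NumPy's legacy global RNG."""
    random.seed(seed)
    if np is not None:
        np.random.seed(seed)


set_seed(42)
first = [random.random() for _ in range(3)]
set_seed(42)
second = [random.random() for _ in range(3)]
print(first == second)  # True
```

Global seeding does not cover everything: scikit-learn estimators and splitters take their own `random_state` argument, NumPy `Generator` objects need a seed passed to `np.random.default_rng(seed)`, and PyTorch and TensorFlow have `torch.manual_seed` and `tf.random.set_seed` respectively.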

Data standards:

- Your submitted code must run on the original train, test, and other datasets provided.

- If external data is allowed, external data must be freely and publicly available, including pre-trained models with standard libraries. If external data is allowed, any data used should be shared with Zindi to be approved and then shared on the discussion forum. Zindi will also make note of the external data available on the data page.

- Packages:

  - You must submit a requirements file with all packages and versions used.

  - If a requirements file is not provided, solutions will be run on the most recent packages available.

  - Custom packages in your submission notebook will not be accepted.

  - You may only use tools available to everyone (i.e. no paid services or free trials that require a credit card).
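One way to build the requirements file is to look up the installed version of each package your notebook imports, using the standard library's `importlib.metadata` (Python 3.8+). This is a sketch; the helper name `pin_requirements` and the package list are hypothetical.

```python
from importlib import metadata
from typing import Iterable, List


def pin_requirements(package_names: Iterable[str]) -> List[str]:
    """Return 'name==version' lines for the installed versions of the given packages."""
    lines = []
    for name in package_names:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Skip (and report) packages that are not installed in this environment.
            print(f"warning: {name} is not installed, skipping")
    return lines


# Hypothetical package list: replace with the packages your notebook actually imports.
print("\n".join(pin_requirements(["numpy", "pandas", "scikit-learn"])))
```

Save the printed lines as `requirements.txt`. Running `pip freeze > requirements.txt` is a common alternative; it pins every installed package, which is more complete but much noisier.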

Consequences of breaking any rules of the competition or submission guidelines:

- First offence: No prizes for 6 months and 2000 points will be removed from your profile (probation period). If you are caught cheating, all individuals involved in cheating will be disqualified from the challenge(s) you were caught in, you will be disqualified from winning any competitions for the next six months, and 2000 points will be removed from your profile. If you have fewer than 2000 points on your profile, your points will be set to 0.

- Second offence: Banned from the platform. If you are caught for a second time your Zindi account will be disabled and you will be disqualified from winning any competitions or Zindi points using any other account.

- Teams with individuals who are caught cheating will not be eligible to win prizes or points in the competition in which the cheating occurred, regardless of the individuals’ knowledge of or participation in the offence.

- Teams with individuals who have previously committed an offence will not be eligible for any prizes for any competitions during the 6-month probation period.
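The first-offence point deduction never takes a profile below zero. In code this is a single clamp; the function name is hypothetical.

```python
PENALTY_POINTS = 2000


def points_after_first_offence(current_points: int) -> int:
    """Remove 2000 points; profiles with fewer than 2000 points drop to 0."""
    return max(current_points - PENALTY_POINTS, 0)


print(points_after_first_offence(5000))  # 3000
print(points_after_first_offence(1500))  # 0
```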

Monitoring of submissions

- We will review the top 5 solutions of every competition when the competition ends.

- We reserve the right to request code from any user at any time during a challenge. You will have 24 hours to submit your code following the rules for code review (see above). Zindi reserves the right not to explain our reasons for requesting code. If you do not submit your code within 24 hours you will be disqualified from winning any competitions or Zindi points for the next six months. If you fall under suspicion again and your code is requested and you fail to submit your code within 24 hours, your Zindi account will be disabled and you will be disqualified from winning any competitions or Zindi points with any other account.
